
Generative AI is transforming software development faster than any technology in recent memory.

More than 76% of developers say they already use AI-assisted coding tools. Reports also show that developers can complete some tasks about 55% faster with AI code suggestions.

Yet for many executives, the promise of AI hasn’t translated into measurable impact. In a recent survey of CIOs and IT leaders, only 32% actively measure both revenue impact and time savings from their AI investments.

The Illusion of Instant Coverage

AI-driven test generation looks like a breakthrough. Feed your codebase to a model, and within seconds it can produce thousands of new test cases. The promise of broader coverage and faster automation is hard to ignore.

But more tests don’t automatically mean better tests.

Even well-designed frameworks, refined over years of best practice, can break when fed low-quality or outdated AI-generated code.

For instance, Appium 3 brought syntax and capability changes that make many Appium 2 examples obsolete. Yet most large language models still default to the older patterns unless you tell them explicitly which version to target.
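One inexpensive safeguard is a pre-review check that flags outdated idioms before a human even opens the diff. The following is a minimal sketch in Python, assuming AI-generated tests use the Appium Python client. `OUTDATED_PATTERNS` and `find_outdated` are hypothetical names, and the three patterns are examples of older Selenium/Appium Python client idioms, not an official Appium 3 migration list; a team would maintain its own list against the versions it actually runs.

```python
import re

# Hypothetical, team-maintained list of idioms considered outdated.
# Each entry pairs a regex with advice shown to the reviewer.
OUTDATED_PATTERNS = [
    (re.compile(r"\bfind_elements?_by_\w+\("),
     "find_element_by_* helpers were removed in Selenium 4.3; use find_element(by, value)"),
    (re.compile(r"\bMobileBy\b"),
     "legacy MobileBy; current Appium Python client code uses AppiumBy"),
    (re.compile(r"\bdesired_capabilities\s*="),
     "legacy desired_capabilities argument; pass an Options object instead"),
]


def find_outdated(source: str) -> list[tuple[int, str, str]]:
    """Return (line number, offending line, advice) for each outdated idiom found."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for pattern, advice in OUTDATED_PATTERNS:
            if pattern.search(line):
                findings.append((lineno, line.strip(), advice))
    return findings
```

Wired into CI, a non-empty result could fail the build or post review comments. The regexes are deliberately simple and will miss idioms split across lines, so this supplements human review rather than replacing it.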

AI-generated code often looks correct while hiding subtle issues that only appear during execution. Engineers then spend hours debugging locator mismatches, dependency conflicts, and brittle assertions — time that negates any initial productivity gains.

In a DevOps.com survey, 60% of organizations admitted they lack a formal process to review or verify AI-generated code before it enters production.

This overreliance on automated output, known as automation bias, is becoming one of the quietest risks in modern software delivery.

Worse, behavioral research shows that people tend to trust AI outputs even when they’re wrong, often overlooking inconsistencies or missing context. As dependence grows, critical thinking can start to fade, not because people know less, but because we assume the machine has already done the hard part.

That’s why a clear foundation rooted in standards, frameworks, and feedback loops becomes essential before introducing AI into testing.

Foundations First: Designing for AI, Not Around It

Before asking an LLM to generate tests, first decide what “good automation” looks like for your organization.

That foundation determines whether AI will accelerate progress or amplify inconsistency.

Establish the basics:

- Define your test architecture (e.g., BDD with reusable components).

- Maintain a consistent locator and naming strategy.

- Create a baseline repository of high-quality test examples — your “gold standard”.

Only once this structure exists should you bring AI into the process. Feed the model those baseline examples and prompt it to produce snippets that match your established framework. This turns the AI from a generic script generator into a collaborator that works within your standards.

Guardrails for GenAI in Test Automation

Once AI becomes part of your workflow, the challenge shifts from generation to governance. Having a solid framework is step one. Maintaining discipline as AI accelerates output is step two.

AI-generated code should follow the same principles as any DevOps-aligned automation practice: governance, feedback, and continuous improvement.

Innovation strategist Jeremy Utley captures this mindset perfectly in his essay “Teammate, Not Technology”, arguing that AI performs best when treated like a colleague, not a replacement.

The same logic applies to test automation:

- Give AI context. Like a new engineer, it needs examples and guidance to understand your standards.

- Review its work. Every suggestion is a draft, not a decision.

- Provide feedback loops. The more you correct and refine, the more closely the output matches your standards.

- Keep humans accountable. AI can’t interpret business logic, prioritize risk, or understand user intent. People still define what “good” looks like.
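The review and feedback points above can be made concrete with very little code. In this hypothetical Python sketch, `ReviewLog` counts accepted suggestions, tallies rejection reasons, and turns the most frequent reasons into rules that could be added to the next prompt alongside the baseline examples. The class and its methods are illustrative assumptions, not part of any tool.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ReviewLog:
    """Hypothetical record of human review decisions on AI-generated tests."""
    accepted: int = 0
    rejections: Counter = field(default_factory=Counter)

    def record(self, accepted: bool, reason: str = "") -> None:
        # Every suggestion is a draft: log whether a reviewer kept it, and why not.
        if accepted:
            self.accepted += 1
        else:
            self.rejections[reason] += 1

    def acceptance_rate(self) -> float:
        total = self.accepted + sum(self.rejections.values())
        return self.accepted / total if total else 0.0

    def prompt_rules(self, top: int = 3) -> list[str]:
        """Turn the most frequent rejection reasons into rules for the next prompt."""
        return [f"Avoid: {reason}" for reason, _ in self.rejections.most_common(top)]
```

Tracking the acceptance rate over time also gives leaders a simple, honest measure of whether the feedback loop is working.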

This mindset turns AI from a productivity tool into a collaborator that scales your team’s best practices, rather than diluting them.

Connecting to DevOps: From Code Explosion to Controlled Flow

In mature DevOps environments, quality is measured by signal-to-noise ratio, not by how many tests are run.

Without structure and guardrails, AI can flood pipelines with unstable tests that slow feedback and inflate maintenance costs.
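A common heuristic for the noise side is flakiness: a test that both passes and fails on the same commit is telling you about the test, not the product. The Python sketch below applies that heuristic to CI history and reports what share of failures come from flaky tests. The `(test_name, commit, passed)` record shape and the function names are assumptions; a real pipeline would pull this data from its CI or test-reporting system.

```python
from collections import defaultdict


def find_flaky(runs: list[tuple[str, str, bool]]) -> set[str]:
    """Return tests that both passed and failed on the same commit.

    runs: (test_name, commit_sha, passed) tuples from CI history.
    """
    outcomes = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return {test for (test, _), seen in outcomes.items() if seen == {True, False}}


def noise_ratio(runs: list[tuple[str, str, bool]], flaky: set[str]) -> float:
    """Share of failing runs that belong to flaky tests (0.0 when nothing failed)."""
    failures = [test for test, _, passed in runs if not passed]
    if not failures:
        return 0.0
    return sum(test in flaky for test in failures) / len(failures)
```

A rising ratio is a cue to quarantine or repair tests before adding more generated ones.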

When aligned with DevOps principles, AI-driven testing becomes intentional:

- Traceable: Every test maps back to a requirement or defect.

- Maintainable: Reusable components minimize duplication.

- Continuous: Root-cause analysis (RCA) and analytics data refine future AI outputs.
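Traceability in particular is easy to check automatically. This Python sketch assumes each test's docstring or description cites a requirement or defect key in a hypothetical `REQ-<n>` / `BUG-<n>` format, and reports both tests with no key and requirements that no test references. The ID format and the `trace` function are illustrative; most teams would match their issue tracker's own key format.

```python
import re

# Hypothetical key format: requirements like "REQ-12", defects like "BUG-7".
TRACE_ID = re.compile(r"\b(?:REQ|BUG)-\d+\b")


def trace(tests: dict[str, str], requirements: set[str]) -> tuple[list[str], set[str]]:
    """Return (tests citing no requirement/defect key, requirements no test cites).

    tests maps each test name to its docstring or description.
    """
    untraced = []
    covered = set()
    for name, doc in tests.items():
        ids = set(TRACE_ID.findall(doc))
        if not ids:
            untraced.append(name)
        covered |= ids
    return untraced, requirements - covered
```

Running it in both directions catches generated tests that verify nothing anyone asked for, as well as requirements that still lack coverage.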

The goal isn’t to automate everything; it’s to automate meaningfully, ensuring each AI-generated test contributes to faster, higher-quality releases.

For leaders, the real opportunity isn’t in adopting AI faster, but in adopting it wisely — with structure, accountability, and intent.

Conclusion

Generative AI is transforming how tests are written, but it’s still up to us to decide how they’re used.

AI will always move faster, but speed without direction can be costly. The goal isn’t to create more tests; it’s to create reliable tests that improve quality and drive confidence.

The future of testing belongs to teams that combine human insight, structure, and discipline with AI’s scale and speed. When we treat AI as a teammate, not just a technology, we stop chasing automation for its own sake and start building quality that lasts.

Talk with our experts about designing a governance-first approach to AI in software delivery.

Explore

What's New In The World of Digital.ai

Escalations Aren’t Noise: They’re Your Most Honest Quality Signal

Most companies insist they care about product quality. Yet many…

Automating QA for Automotive Applications

Whether you’re building a music app, an EV charging service,…

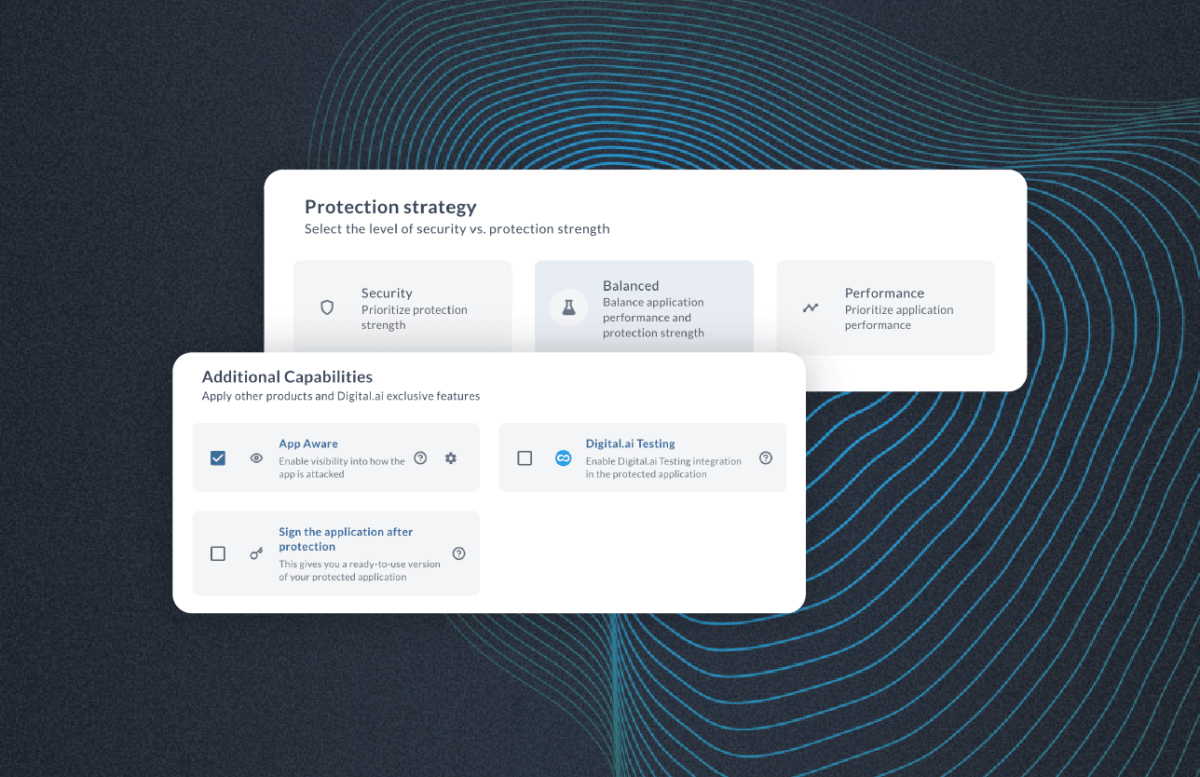

When AI Accelerates Everything, Security Has to Get Smarter

Software delivery has entered a new phase. Since 2022, AI-driven…