
If you believe the marketing brochures, AI is about to fix your broken tests, write your new ones, and maybe even pass that one flaky test that only fails on Fridays. But if you talk to the engineers actually using it, the story is different.

The reality of 2025 is that AI has moved from “experimental toy” to “business-critical tool”, but it is arguably not the autonomous replacement many promised. Here is a breakdown of where AI is failing to deliver and where it is actually generating ROI.

Why AI in Testing is Attractive

Teams are not chasing AI in testing for fun; they are trying to survive shrinking release cycles and exploding system complexity. The pitch is simple: more coverage, less maintenance, and faster feedback.

The promised benefits are clear:

- Higher regression coverage via AI-generated or AI-optimized test suites.

- Reduced maintenance through self-healing locators and smarter test selection.

- Faster bug detection with predictive analytics and AI-assisted reporting.

The question is not whether AI can help, but where it reliably helps today and where it quietly becomes another source of risk and wasted time.

Where AI is Already Useful

There are several areas where AI in testing is delivering real value when used with clear constraints.

1. Test Case Generation and Optimization

- NLP-based tools can derive initial test scenarios from requirements or user stories, giving teams a solid first draft instead of a blank page.

- Emerging agent-based approaches (such as Appium MCP) take this further. Instead of just reading text, an AI agent can interactively explore a running mobile application, verify element selectors against the live UI, and generate functional code drafts based on actual interaction rather than guesswork.

- ML models can prioritize regression suites based on historical failures and code change patterns, shrinking execution time while preserving risk focus. The biggest efficiency win isn’t writing tests faster, but running fewer of them. Meta pioneered “Predictive Test Selection” in 2019 to handle their massive monolithic codebases.

- How it works: Instead of running every test on every commit, they use a machine learning model (specifically Gradient Boosted Decision Trees) to analyze code changes, file dependencies, and historical failure rates. The model predicts which tests are relevant to the specific code change. This allows them to skip roughly 66% of their test suite on every run while still catching 99.9% of regressions, effectively cutting their infrastructure bill in half.
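To make the selection idea concrete, here is a minimal sketch of change-based test selection. It is not Meta's system: where Meta trains gradient boosted decision trees on many features, this sketch swaps in a single hand-rolled feature, the smoothed historical rate at which a test failed when a given file changed. The names HistoricalRun and CoFailureSelector, the sample history, and the 0.3 threshold are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class HistoricalRun:
    """One past CI run: which files changed and which tests failed (hypothetical schema)."""
    changed_files: frozenset
    failed_tests: frozenset


class CoFailureSelector:
    """Estimates P(test fails | file changed) from history and keeps only risky tests.

    A stand-in for a gradient boosted model: it uses one feature (historical
    co-failure of a file and a test) instead of many.
    """

    def __init__(self, alpha: float = 1.0):
        self.alpha = alpha                    # Laplace smoothing strength
        self.file_changes = defaultdict(int)  # file -> number of runs that changed it
        self.co_failures = defaultdict(int)   # (file, test) -> failures in those runs

    def fit(self, history):
        for run in history:
            for f in run.changed_files:
                self.file_changes[f] += 1
                for t in run.failed_tests:
                    self.co_failures[(f, t)] += 1
        return self

    def failure_probability(self, changed_files, test):
        # Score by the riskiest changed file. Unseen files score alpha / (2 * alpha) = 0.5,
        # so brand-new code falls back to running the test instead of skipping it.
        best = 0.0
        for f in changed_files:
            n = self.file_changes.get(f, 0)
            k = self.co_failures.get((f, test), 0)
            best = max(best, (k + self.alpha) / (n + 2 * self.alpha))
        return best

    def select(self, changed_files, candidate_tests, threshold=0.3):
        scored = [(self.failure_probability(changed_files, t), t) for t in candidate_tests]
        # Highest-risk tests first; everything below the threshold is skipped.
        return [t for p, t in sorted(scored, reverse=True) if p >= threshold]


history = [
    HistoricalRun(frozenset({"cart.py"}), frozenset({"test_checkout"})),
    HistoricalRun(frozenset({"cart.py"}), frozenset({"test_checkout"})),
    HistoricalRun(frozenset({"cart.py"}), frozenset()),
    HistoricalRun(frozenset({"login.py"}), frozenset({"test_login"})),
]
selector = CoFailureSelector().fit(history)
tests = ["test_checkout", "test_login", "test_search"]
print(selector.select({"cart.py"}, tests))         # ['test_checkout']
print(selector.select({"new_feature.py"}, tests))  # all three tests run for unseen code
```

The threshold is the knob that trades runtime saved against regressions missed; a production model would add features such as file distance in the dependency graph and test flakiness.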

2. Maintenance and Flakiness Reduction

- Self-healing locators can automatically update UI element references when attributes change in predictable ways, preventing tests from breaking due to minor cosmetic updates.

- A prime example is Healenium, an open-source library that acts as a proxy between your test code and Appium.

- How it works: If a standard selector (like id="submit-btn") fails, Healenium catches the NoSuchElementException, scans the current page’s DOM, and uses a Longest Common Subsequence (LCS) algorithm to find the element that looks most like the missing one (e.g., id="submit-btn-v2"). It “heals” the test at runtime so that execution continues uninterrupted, and logs the change for the engineer to review later.

- Pattern analysis on past runs helps distinguish truly flaky tests from legitimate failures, improving the signal-to-noise ratio in CI pipelines.

- ReportPortal is a great open-source option. It aggregates test results from many frameworks (JUnit, TestNG, Cypress, etc.) and uses an ML-based Auto-Analysis engine.

- How it works: When a test fails, the AI scans the stack trace and error logs. It compares this failure against the history of all previous failures. If it finds a matching pattern (e.g., “this looks 95% like the ‘Database Timeout’ issue you marked as a system bug last week”), it automatically categorizes the failure for you. This saves teams from manually triaging the same known issues every morning.
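The healing step described for Healenium can be approximated in a few lines. This is a simplified illustration, not Healenium's code: Healenium scores far more of an element's context than a single attribute, whereas this sketch compares only id strings and normalizes the LCS length into a similarity score between 0 and 1. The function names, the candidate list, and the 0.6 cut-off are hypothetical.

```python
def lcs_length(a: str, b: str) -> int:
    """Length of the longest common subsequence of a and b (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for ch_a in a:
        curr = [0]
        for j, ch_b in enumerate(b, start=1):
            if ch_a == ch_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def similarity(a: str, b: str) -> float:
    """Normalize LCS length to [0, 1]; identical strings score 1.0."""
    if not a and not b:
        return 1.0
    return 2 * lcs_length(a, b) / (len(a) + len(b))


def heal_locator(lost_id, candidate_ids, min_score=0.6):
    """Return the candidate id most similar to lost_id, or None if nothing is close.

    candidate_ids would come from the current page source (hypothetical input);
    min_score is an assumed cut-off, not a Healenium setting.
    """
    scored = [(similarity(lost_id, c), c) for c in candidate_ids]
    if not scored:
        return None
    score, best = max(scored)
    if score < min_score:
        return None  # fail loudly rather than click the wrong element
    # Log the substitution so an engineer can review it later.
    print(f"healed: id={lost_id!r} -> id={best!r} (score {score:.2f})")
    return best


current_ids = ["cancel-btn", "submit-btn-v2", "search-box"]
healed = heal_locator("submit-btn", current_ids)  # healed == "submit-btn-v2"
```

The cut-off matters: set it too low and a "healed" test silently interacts with the wrong element, which is exactly the kind of green-but-wrong result that makes review of the healing log necessary.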
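The Auto-Analysis flow described for ReportPortal boils down to nearest-neighbour matching on failure logs. The sketch below is not ReportPortal's implementation or API; it swaps in plain bag-of-words cosine similarity, and the 0.8 threshold, function names, and sample logs are assumptions. The label strings are modelled on ReportPortal's defect types (System Issue, Product Bug, To Investigate).

```python
import math
import re
from collections import Counter


def tokenize(log: str) -> Counter:
    # Lowercase words only; digits are dropped so timestamps and line numbers don't matter.
    return Counter(re.findall(r"[a-z_]+", log.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def auto_analyze(new_log, labeled_history, threshold=0.8):
    """Return (label, score) of the most similar triaged failure, else "To Investigate"."""
    new_vec = tokenize(new_log)
    best_label, best_score = None, 0.0
    for old_log, label in labeled_history:
        score = cosine(new_vec, tokenize(old_log))
        if score > best_score:
            best_label, best_score = label, score
    if best_label is not None and best_score >= threshold:
        return best_label, best_score
    return "To Investigate", best_score  # no confident match: a human triages it


history = [
    ("TimeoutError: database connection pool exhausted after 30s at db/pool.py line 88", "System Issue"),
    ("AssertionError: expected total 100.00 but was 90.00 in test_checkout", "Product Bug"),
]
print(auto_analyze("TimeoutError: database connection pool exhausted after 31s at db/pool.py line 92", history))
print(auto_analyze("NullPointerException in LoginController", history))
```

Falling back to "To Investigate" below the threshold keeps the human in the loop for anything the history cannot explain.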

3. Analytics, Prediction, and Reporting

- AI models can mine defect logs and execution data to highlight modules with disproportionate failure risk.

- Generative models can convert unstructured feedback (support tickets, reviews) into structured bug reports with suggested severity and reproduction steps.
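As a baseline for mining defect data, even a simple per-module defect rate can surface hotspots before any ML model is involved. This sketch is a plain statistical heuristic rather than an AI model; the input format (one (module, introduced_defect) pair per commit), the 2x factor, and the minimum-history cut-off are hypothetical choices.

```python
from collections import Counter


def risky_modules(commits, factor=2.0, min_commits=5):
    """Flag modules whose defect rate is at least `factor` times the overall rate.

    `commits` is an iterable of (module, introduced_defect) pairs, e.g. obtained by
    linking bug-fix commits back to the changes that caused them (assumed input).
    """
    totals, defects = Counter(), Counter()
    for module, introduced_defect in commits:
        totals[module] += 1
        defects[module] += int(introduced_defect)
    overall_rate = sum(defects.values()) / max(sum(totals.values()), 1)
    flagged = {}
    for module, n in totals.items():
        if n < min_commits:
            continue  # too little history to judge
        rate = defects[module] / n
        if overall_rate > 0 and rate >= factor * overall_rate:
            flagged[module] = rate
    # Riskiest modules first.
    return dict(sorted(flagged.items(), key=lambda item: item[1], reverse=True))


commits = ([("payments", True)] * 4 + [("payments", False)] * 6
           + [("search", True)] * 2 + [("search", False)] * 18
           + [("ui", False)] * 20)
print(risky_modules(commits))  # {'payments': 0.4}
```

A learned model earns its keep only if it beats a baseline like this one, which is a useful check before adopting a vendor's risk dashboard.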

The Verdict

When used in the right places, AI takes care of repetitive, pattern-heavy work so QA teams can focus on what truly determines whether software is ready to ship.

Where AI is Overhyped or Failing

The pain starts when organizations expect AI to replace judgment, context, or domain expertise instead of simply automating grunt work.

- “One-Click” Autonomous Testing – Generating thousands of scripts from requirements looks impressive on a dashboard, but many of those tests are redundant, low-value, or misaligned with real user risk. Teams often spend so much time validating and repairing AI-generated tests that the net productivity gain disappears.

- Lack of Domain and Business Understanding – AI models do not understand regulations, business rules, or domain risk; they see patterns, not consequences. Critical defects in areas like pricing, medical dosage, or financial compliance frequently require deep domain reasoning that current AI tooling cannot reproduce reliably.

- The “Token Trap” and Financial Reality – While vendors pitch “autonomous agents”, they rarely mention the operating bill. Running high-reasoning LLMs (like GPT-5.2) on massive regression suites gets expensive fast: a single “agentic” test run can burn thousands of tokens “thinking” about every click. Multiply that by 5,000 nightly tests, and your CI bill can explode right under your nose. Then there is the Verification Tax: the real cost is human time. Senior engineers often spend more time debugging an AI’s “almost correct” code, which frequently lacks structure or reusability, than they would have spent writing it cleanly from scratch.

- The Data, Integration, and Complexity Tax – Tools need high-quality, well-structured data (logs, histories, labels); many organizations simply do not have this, leading to unreliable predictions and noisy insights. Furthermore, integrating AI platforms into heterogeneous CI/CD pipelines and legacy stacks introduces complexity that can offset gains, especially for smaller teams.
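The “Token Trap” arithmetic is worth doing explicitly for your own suite. Every number below (steps per test, tokens per step, and especially the price per million tokens) is a hypothetical placeholder, not a measured figure or any vendor's published rate; substitute your own.

```python
from dataclasses import dataclass


@dataclass
class AgentRunAssumptions:
    steps_per_test: int            # clicks/checks the agent reasons about per test
    tokens_per_step: int           # prompt + reasoning + output tokens per step
    tests_per_night: int
    usd_per_million_tokens: float  # hypothetical blended price, not a real price list


def nightly_tokens(a: AgentRunAssumptions) -> int:
    return a.steps_per_test * a.tokens_per_step * a.tests_per_night


def nightly_cost_usd(a: AgentRunAssumptions) -> float:
    return nightly_tokens(a) / 1_000_000 * a.usd_per_million_tokens


suite = AgentRunAssumptions(steps_per_test=40, tokens_per_step=3_000,
                            tests_per_night=5_000, usd_per_million_tokens=10.0)
print(f"{nightly_tokens(suite):,} tokens")                      # 600,000,000 tokens
print(f"${nightly_cost_usd(suite):,.0f} per night")             # $6,000 per night
print(f"${nightly_cost_usd(suite) * 30:,.0f} per 30 nights")    # $180,000 per 30 nights
```

Cost grows linearly in each factor, but the factors multiply: doubling both the steps per test and the suite size quadruples the bill, which is why it tends to surprise teams.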

The Risk

AI can make your dashboards greener and your pipelines faster, without making your software any safer. Judgment, context, and accountability still matter. Bugs may not show up in dashboards, but they will show up for customers.

Pragmatic Guidelines for Using AI in Testing

A reality check does not mean “do not use AI”; it means designing for incremental, testable value instead of the wholesale replacement of existing practices.

Practical principles to follow:

- Start with one well-bounded use case: Pick a domain like regression test selection, flaky test detection, or performance diagnostics. Measure impact objectively (e.g., reduced runtime, fewer flaky failures). Avoid spreading AI thinly across many areas before you have proof it works in one.

- Keep humans in the decision loop: Use AI to propose tests, priorities, and insights; let experienced QA and domain experts validate what actually ships. Treat AI outputs as recommendations, not ground truth, especially in safety-, finance-, or compliance-critical systems.

- Invest in data and observability: Standardize defect taxonomy, logging, and test result storage so AI models have clean, consistent data to learn from. Monitor model behavior over time; if predictions drift or noise creeps up, tune or retrain instead of blindly trusting earlier results.
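For the principles of measuring impact objectively and monitoring model behavior, a test-selection pilot can be tracked with two numbers: the share of real regressions the selected subset still caught, and the share of runtime saved. This sketch assumes you periodically run the full suite to obtain ground truth; the SelectionRun type, the weekly windows, and the 0.95 recall floor are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SelectionRun:
    selected: set               # tests the AI chose to run
    failed_in_full_run: set     # ground truth from a periodic full-suite run (assumed)
    selected_minutes: float     # runtime of the selected subset
    full_minutes: float         # runtime of the full suite


def window_metrics(runs):
    """Return (regression recall, share of runtime saved) over a window of runs."""
    failures = sum(len(r.failed_in_full_run) for r in runs)
    caught = sum(len(r.failed_in_full_run & r.selected) for r in runs)
    recall = caught / failures if failures else 1.0
    full = sum(r.full_minutes for r in runs)
    saved = 1 - sum(r.selected_minutes for r in runs) / full if full else 0.0
    return recall, saved


def drifting_windows(windows, min_recall=0.95):
    """Indices of windows (e.g. weeks) whose recall fell below the agreed floor."""
    return [i for i, runs in enumerate(windows) if window_metrics(runs)[0] < min_recall]


week1 = [SelectionRun({"test_a", "test_b"}, {"test_a"}, 10, 30)]
week2 = [SelectionRun({"test_a"}, {"test_a"}, 10, 30),
         SelectionRun({"test_a"}, {"test_b"}, 10, 30)]  # test_b regressed but was skipped
recall, saved = window_metrics(week1)
print(f"{recall:.0%} recall, {saved:.0%} runtime saved")  # 100% recall, 67% runtime saved
print(drifting_windows([week1, week2]))                   # [1]
```

Reporting recall next to runtime saved keeps the pilot honest: a faster pipeline that starts missing regressions shows up as drift rather than as a win.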

Under these conditions, AI becomes a powerful assistant embedded in the testing workflow, not a brittle black box sitting on top of it.

A Realistic Future for AI in QA

The near-term future is not fully autonomous testing that replaces QA teams; it is workflow-aware assistants woven through planning, design, execution, and analysis.

Tools will get better at:

- Translating requirements into starting-point test assets.

- Surfacing risks earlier using historical and production data.

- Automating the tedious glue work across tools and environments.

But human testers will remain central for:

- Understanding business impact and regulatory context.

- Designing meaningful experiments and exploratory charters.

- Deciding what “good enough” quality really means for a release.

AI will change how testing is done and what skills matter, but it will not absolve teams from owning quality—if anything, it raises the bar on how strategically QA leaders use their tools.

Reevaluate your AI testing tools to ensure they reduce real risk—not just pipeline runtime.

Explore

What's New In The World of Digital.ai

When AI Accelerates Everything, Security Has to Get Smarter

Software delivery has entered a new phase. Since 2022, AI-driven…

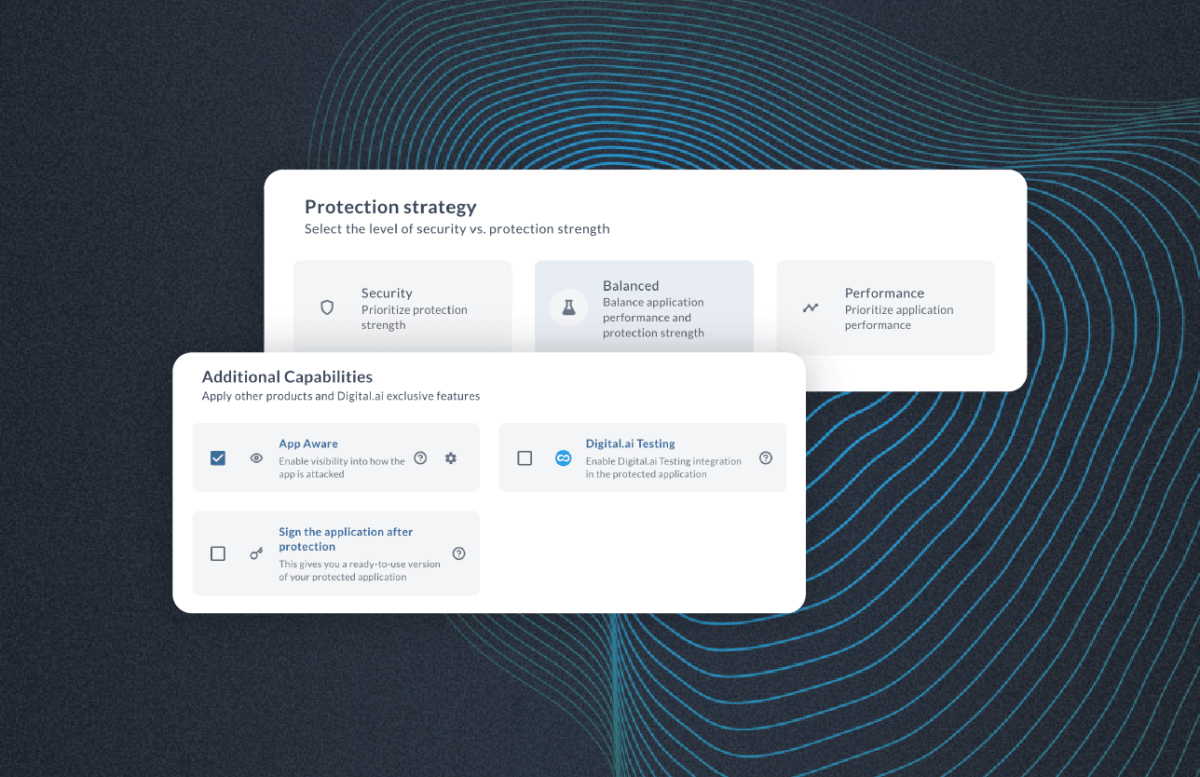

The Invisible Wall: Why Secured Apps Break Test Automation

Modern mobile apps are more protected than ever. And that’s…

Shared, Not Exposed: How Testing Clouds Are Being Redefined

The Evolution of Device Clouds: From Public to Private to…