What is Edge AI?

Edge AI is the deployment of AI applications on devices throughout the physical world. It’s called “edge AI” because the AI computation is done near the user, at the edge of the network, close to where the data is located, rather than centrally in a cloud computing facility or private data center. Because the internet has global reach, the edge of the network can be almost any location: a retail store, a factory, a hospital, or the IoT devices all around us, such as traffic lights, security cameras, medical devices, sensors, autonomous machines, and phones.

Why is Edge AI Needed?

Having a central AI computational hub in the cloud is not always efficient. Some AI technologies require low latency between data gathering and decision-making. Many AI computational tasks are too demanding to run centrally for thousands of concurrent users. There are also security and privacy concerns when sensitive data, such as personally identifiable information (PII), is transferred over the network and processed remotely.

Since AI algorithms can understand language, sights, sounds, smells, temperature, faces, and other analog forms of unstructured information, they’re particularly useful in places occupied by end users with real-world problems. These AI applications would be impractical or even impossible to deploy in a centralized cloud or enterprise data center due to latency, bandwidth, and privacy issues.

Edge AI Tradeoffs

One of the biggest benefits of Edge AI is that AI devices are close to the places where they are applied. AI decentralization greatly reduces the processing stress on the central processing hub, reduces data transmission costs and network stress, and reduces risk by eliminating the need to transfer sensitive data over the network. The biggest drawback of Edge AI is that AI algorithms and the associated IP are placed outside the controllable, trusted environment.

Edge AI Attack Surfaces and Mitigations

Because Edge AI places AI devices outside the trusted environment, it completely changes the size and shape of the attack surface. The Edge AI ecosystem can be broken down into smaller components. In the following sections, we describe the main components of Edge AI technology to identify their security risks, attack surfaces, and mitigation options.

AI model

Securing the AI models in Edge AI applications is highly important because it protects them from unauthorized access and misuse. These models are the result of extensive research and hold valuable information. If they aren’t properly secured, threat actors can cause a range of problems. For example, they can manipulate the model’s output to spread false information or commit fraud (evasion and adversarial attacks). They can also tamper with the model itself to make it perform poorly or behave unfairly. In critical industries like self-driving cars, healthcare, or infrastructure, such attacks could be catastrophic. Meanwhile, enterprises whose Edge AI models are stolen, leaked, or adapted can suffer serious financial damage. Overall, securing AI models prevents unauthorized use and helps ensure that artificial intelligence is deployed responsibly and ethically.
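
The following toy sketch shows what an evasion attack looks like. It assumes a hypothetical linear classifier with made-up weights (`w`, `b`), not any real Edge AI model.

For a linear score, the gradient with respect to the input is simply the weight vector. A fast-gradient-sign style step therefore nudges each feature by at most `eps` against the sign of its weight. That lowers the score as much as possible within that budget and can flip the decision.

```python
# Toy evasion attack on a hypothetical linear classifier (illustration only).

def score(w, b, x):
    # Linear decision score; the sample is classified "positive" when score > 0.
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def sign(v):
    # Returns 1, 0, or -1.
    return (v > 0) - (v < 0)

def evade(w, x, eps):
    # The gradient of the score w.r.t. x is w, so stepping each feature
    # by eps against sign(w) lowers the score under an L-infinity budget of eps.
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]

# Hypothetical weights and input chosen only for this example.
w = [0.8, -0.5, 0.3]
b = -0.2
x = [0.6, 0.2, 0.4]

x_adv = evade(w, x, eps=0.3)
print(score(w, b, x) > 0, score(w, b, x_adv) > 0)  # True False
```

Here, changing each feature by at most 0.3 turns a positive prediction into a negative one. An attacker who has extracted the model from a device can compute such perturbations offline, which is one reason model confidentiality matters.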

Mitigations

- AI model encryption – The AI model is valuable intellectual property, so as a baseline security measure it should be kept encrypted on disk. Because the model could still be dumped from the app’s memory, implementations should account for this risk and avoid keeping the raw model in memory, or at least release it as soon as the AI engine has parsed it. In addition, consider a white-box cryptography solution, because white-box cryptography helps prevent “break once, run everywhere” (BORE) attacks.

- AI model embedding – When Edge AI requires AI models to be embedded within applications, ensure that the application hosting the model is protected with hardening tools and that communications to and from the application are protected with a white-box cryptography solution.
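
The encrypt-at-rest, decrypt, parse, and wipe flow for model encryption can be sketched as follows. This is a minimal illustration under several assumptions:

- To stay within the Python standard library, it uses a toy construction: an HMAC-SHA256 counter-mode keystream plus an encrypt-then-MAC tag. It stands in for a vetted AEAD such as AES-GCM.
- The keys are random placeholders. In a real deployment, protecting those keys is the hard part, which is where white-box cryptography comes in.
- The function names and the JSON model format are hypothetical.
- Python cannot guarantee that no other copies of the plaintext remain in memory, so the wipe is best effort only.

```python
import hashlib
import hmac
import json
import os

NONCE_LEN = 16
TAG_LEN = 32  # SHA-256 output size in bytes


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # Toy PRF-based keystream: concatenated HMAC-SHA256(key, nonce || counter) blocks.
    out = bytearray()
    counter = 0
    while len(out) < length:
        block = hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256)
        out += block.digest()
        counter += 1
    return bytes(out[:length])


def encrypt_model(enc_key: bytes, mac_key: bytes, model_bytes: bytes) -> bytes:
    # Encrypt-then-MAC; the stored file layout is nonce || ciphertext || tag.
    nonce = os.urandom(NONCE_LEN)
    stream = _keystream(enc_key, nonce, len(model_bytes))
    ciphertext = bytes(p ^ s for p, s in zip(model_bytes, stream))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def load_model(enc_key: bytes, mac_key: bytes, blob: bytes, parse):
    nonce, ciphertext, tag = blob[:NONCE_LEN], blob[NONCE_LEN:-TAG_LEN], blob[-TAG_LEN:]
    # Reject a modified model file before decrypting anything.
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("model file failed integrity check")
    stream = _keystream(enc_key, nonce, len(ciphertext))
    raw = bytearray(c ^ s for c, s in zip(ciphertext, stream))
    try:
        # The engine parses the plaintext into its own in-memory structures.
        return parse(raw)
    finally:
        # Best-effort wipe of the decrypted buffer once parsing is done.
        raw[:] = bytes(len(raw))


# Usage with placeholder keys and a hypothetical JSON-encoded model.
enc_key, mac_key = os.urandom(32), os.urandom(32)
weights = json.dumps({"w": [0.8, -0.5, 0.3], "b": -0.2}).encode()
blob = encrypt_model(enc_key, mac_key, weights)
model = load_model(enc_key, mac_key, blob, lambda buf: json.loads(buf.decode("utf-8")))
```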

Data

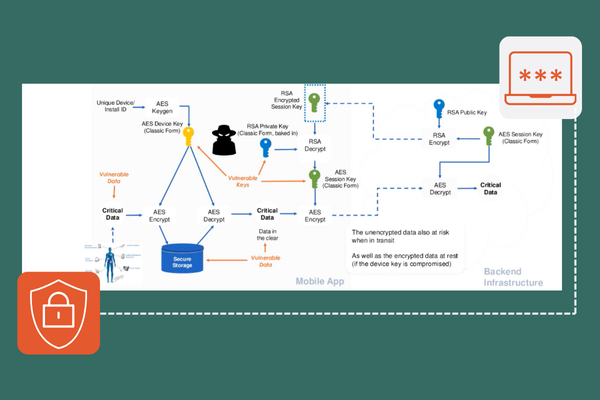

Securing AI data in Edge AI applications is highly important because of the risks of unauthorized access and misuse. Edge AI applications often run on devices with limited computational power and storage capacity, yet they must store and process sensitive data locally. This can lead to privacy breaches in which personal or confidential information is exposed. Moreover, malicious actors can use the acquired AI data to train their own models or extract valuable insights, potentially leading to intellectual property theft or unfair competitive advantages. Tampering with AI data in Edge AI applications can also manipulate AI algorithms and produce biased or distorted outcomes, known as “data poisoning attacks.” This can have severe consequences in critical areas such as healthcare diagnosis or criminal databases.

Mitigations

- Data encryption – Data and AI IP are typically safeguarded through encryption and security protocols when at rest (in storage) and in transit over a network (in transmission). During use, however, such as when they are processed and executed, they become vulnerable to unauthorized access and runtime attacks. For this reason, organizations should use white-box cryptography so that encryption keys never appear in the clear at any point in the application’s lifecycle.

- Confidential computing – Confidential computing closes the gap in protecting data in use. It performs computations in a secure, isolated environment within the processor, known as a trusted execution environment (TEE). However, relying on a TEE makes the hardware itself an attack vector.

- Secure data transit over the network – Attackers can abuse the backend API to send forged requests or obtain additional data. API protection adds a further layer of data encryption and prevents request replay and forgery attacks.
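
The replay and forgery protection described for API calls can be illustrated with request signing. This is a minimal sketch under stated assumptions:

- The device and backend share a secret key. On an edge device, that key would itself need white-box protection.
- The header names and the `/v1/infer` path are hypothetical.
- Transport encryption, such as TLS, is handled separately.

Each request carries a timestamp, a random nonce, and an HMAC-SHA256 signature. The server rejects stale timestamps, bad signatures, and nonces it has already accepted.

```python
import hashlib
import hmac
import os
import time

MAX_SKEW_SECONDS = 30  # assumed tolerance for clock drift between device and server


def _canonical(method: str, path: str, ts: str, nonce: str, body: bytes) -> bytes:
    # Every field that must not be altered is covered by the signature.
    return "\n".join([method, path, ts, nonce]).encode() + b"\n" + body


def sign_request(key: bytes, method: str, path: str, body: bytes, now=None) -> dict:
    ts = str(int(time.time() if now is None else now))
    nonce = os.urandom(16).hex()
    sig = hmac.new(key, _canonical(method, path, ts, nonce, body), hashlib.sha256).hexdigest()
    return {"X-Timestamp": ts, "X-Nonce": nonce, "X-Signature": sig}


class RequestVerifier:
    def __init__(self, key: bytes):
        self.key = key
        self.seen = {}  # nonce -> timestamp of requests already accepted

    def verify(self, method: str, path: str, body: bytes, headers: dict, now=None) -> bool:
        now = time.time() if now is None else now
        ts, nonce = headers["X-Timestamp"], headers["X-Nonce"]
        if abs(now - int(ts)) > MAX_SKEW_SECONDS:
            return False  # stale or future-dated request
        msg = _canonical(method, path, ts, nonce, body)
        expected = hmac.new(self.key, msg, hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, headers["X-Signature"]):
            return False  # forged or modified request
        # Drop nonces older than the window; the timestamp check already rejects them.
        self.seen = {n: t for n, t in self.seen.items() if now - t <= MAX_SKEW_SECONDS}
        if nonce in self.seen:
            return False  # replay of an already accepted request
        self.seen[nonce] = int(ts)
        return True


key = os.urandom(32)  # placeholder shared key
headers = sign_request(key, "POST", "/v1/infer", b'{"x": 1}', now=1000)
server = RequestVerifier(key)
print(server.verify("POST", "/v1/infer", b'{"x": 1}', headers, now=1005))  # True
print(server.verify("POST", "/v1/infer", b'{"x": 1}', headers, now=1006))  # False (replay)
```

The nonce cache only needs to cover the timestamp window, so it stays small on the server.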

AI Applications

Securing Edge AI applications is crucial because threat actors can reverse engineer and tamper with them, extracting valuable knowledge to their advantage. Without proper security measures, threat actors can exploit vulnerabilities in these applications to gain unauthorized access to stored data. By reverse engineering an app, they can uncover proprietary algorithms and models, compromising intellectual property, and use that knowledge to build new AI applications with stolen AI models. Tampering allows them to modify the application, leading to biased results or unauthorized data access. Strong security measures are necessary to protect Edge AI applications, ensure data integrity, safeguard intellectual property, and maintain a fair, competitive environment.

Mitigations

- Anti-tampering mechanisms – Code tampering detection techniques, such as code checksumming, detect attempts to change app behavior. White-box cryptography should be used in untrusted environments to prevent “break once, run everywhere” (BORE) attacks, especially where the application interacts with encrypted files such as AI data and models.

- Anti-static analysis – Code obfuscation and transformation greatly increase the time needed to reverse engineer the business logic and can sometimes prevent it entirely.

- Anti-dynamic analysis – Dynamic analysis is how attackers learn how software behaves at runtime, so detecting and stopping it early can prevent further analysis of the software.

- Anti-code lifting mechanisms – Methods like code damaging, masquerading, and self-healing prevent attackers from lifting code and running it on a different device than the one intended.

- Platform integrity checks – Software can be extracted from the Edge AI device and executed in a foreign environment, or the Edge AI device itself can be tampered with. Platform integrity checks can flag these conditions and warn of attempts to reverse engineer the app.
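
Two of these measures, code checksumming and a basic anti-debugging test, can be sketched in Python. The function names are hypothetical, and the expected digest is assumed to be recorded at build time.

This is only an illustration of the idea, with two known limits:

- `sys.gettrace()` only reveals a Python-level trace function installed with `sys.settrace`. It does not see native debuggers.
- A check whose expected value sits beside the code can be patched along with it. For that reason, hardening tools typically obfuscate such checks and make them protect one another.

```python
import hashlib
import hmac
import sys


def sha256_of_file(path: str, chunk_size: int = 65536) -> str:
    # Stream the file so large binaries do not need to fit in memory.
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def code_unmodified(path: str, expected_hex: str) -> bool:
    # Compare the current digest against the one recorded at build time.
    return hmac.compare_digest(sha256_of_file(path), expected_hex)


def python_tracer_attached() -> bool:
    # Non-None while a Python-level trace function (e.g. from pdb or a coverage tool) is set.
    return sys.gettrace() is not None


def runtime_integrity_problems(path: str, expected_hex: str) -> list:
    # Collect findings so the app can decide how to react (log, degrade, or exit).
    problems = []
    if not code_unmodified(path, expected_hex):
        problems.append("code checksum mismatch")
    if python_tracer_attached():
        problems.append("Python tracer attached")
    return problems
```

Returning a list of findings, rather than exiting immediately, leaves the response policy to the application. Hardening tools often delay or vary the reaction so attackers cannot easily locate the check.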

All of this can be achieved with application hardening tools. For a guide on how to incorporate app hardening into your DevSecOps practice, see the IDC Spotlight paper.

Hardware

Because it lives on the edge, outside the security perimeter, Edge AI hardware is exposed to attacks by threat actors, and these attacks can have significant consequences. Attackers may exploit hardware vulnerabilities to gain unauthorized access, disrupt operations, or extract sensitive information. They could carry out physical attacks, such as tampering with the device’s components, compromising its functionality, or stealing valuable data stored on it. Attackers may also target the firmware or software components of the Edge AI hardware, injecting malicious code or malware that compromises the system’s security and integrity. The results range from unauthorized access to sensitive data and privacy breaches to disruption of critical services that rely on the Edge AI hardware. Furthermore, compromised Edge AI hardware can be an entry point for broader network attacks, enabling threat actors to infiltrate connected systems or launch larger-scale cyber-attacks.

Mitigations

To mitigate these risks, implement robust security measures, including hardware-level security features, regular firmware updates, and comprehensive monitoring to detect and prevent unauthorized access or tampering attempts on Edge AI hardware.
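
Firmware updates only help if a device refuses malicious or outdated images. The sketch below checks three things before accepting an update:

- the update manifest is authentic;
- its version is newer than the installed one (anti-rollback);
- the image matches the digest in the manifest.

To stay within the Python standard library, it uses a shared-key HMAC. Real devices commonly verify an asymmetric signature against a public key anchored in hardware instead, and keep the installed version in tamper-resistant storage. The `UpdateManifest` layout and the function names are hypothetical.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass
class UpdateManifest:
    version: int     # firmware version; must only ever increase
    sha256_hex: str  # digest of the firmware image
    tag_hex: str     # authenticator over (version, digest)


def manifest_tag(key: bytes, version: int, sha256_hex: str) -> str:
    # Bind the version and the image digest together under one MAC.
    msg = version.to_bytes(8, "big") + bytes.fromhex(sha256_hex)
    return hmac.new(key, msg, hashlib.sha256).hexdigest()


def accept_update(key: bytes, current_version: int, manifest: UpdateManifest, image: bytes):
    # 1. The manifest must come from the holder of the update key.
    expected = manifest_tag(key, manifest.version, manifest.sha256_hex)
    if not hmac.compare_digest(expected, manifest.tag_hex):
        return False, "manifest is not authentic"
    # 2. Anti-rollback: never install an older (possibly vulnerable) version.
    if manifest.version <= current_version:
        return False, "rollback to an older or equal version"
    # 3. The downloaded image must be exactly the one the manifest describes.
    if not hmac.compare_digest(hashlib.sha256(image).hexdigest(), manifest.sha256_hex):
        return False, "image does not match manifest"
    return True, "ok"


key = b"\x01" * 32  # placeholder device key
image = b"firmware v2 image bytes"
digest = hashlib.sha256(image).hexdigest()
manifest = UpdateManifest(2, digest, manifest_tag(key, 2, digest))
print(accept_update(key, 1, manifest, image))  # (True, 'ok')
print(accept_update(key, 2, manifest, image))  # (False, 'rollback to an older or equal version')
```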

Summary

Edge AI refers to deploying AI applications on devices in the physical world, closer to where data is generated, rather than relying on centralized cloud computing. It offers several advantages, such as low latency, improved privacy, reduced network stress, and lower data transmission costs. However, the decentralized nature of Edge AI introduces new security risks and attack surfaces. The main components of Edge AI, including AI models, data, AI applications, and hardware, need to be secured to prevent unauthorized access, misuse, tampering, and theft. Mitigation strategies include:

- AI model encryption and embedding

- Data encryption

- White-box cryptography to protect encryption keys

- Confidential computing

- Secure data transit over networks

- Anti-tampering mechanisms

- Anti-code lifting mechanisms

- Code obfuscation

- Anti-dynamic analysis techniques

- Platform integrity checks

- Hardware-level security features

Implementing these measures is crucial for protecting sensitive information, ensuring responsible AI deployment, maintaining data integrity, and safeguarding intellectual property in Edge AI ecosystems.
