AI is widely considered the future of software development. However, according to an MIT study, nearly 95 percent of enterprise AI initiatives fail to deliver measurable business outcomes, largely because most organizations’ processes, data quality, and governance procedures are ill-equipped to address the unique challenges AI presents.

At Digital.ai, our mission is to help customers leverage AI effectively through root cause analysis, release management, and governance capabilities, so that AI is properly implemented and tested across the SDLC and in production.

By reviewing and implementing proper testing and release orchestration procedures, enterprises can identify and address performance and compliance risks while adopting AI at scale.

Learn how Digital.ai helps enterprises adopt AI responsibly.

Common Issues Impacting Quality Across AI Code Assistants

AI code assistants generate the most statistically likely code for your prompt and nearby context, not necessarily the correct code for your exact system. As a result, many early adopters see poorly performing code for several reasons:

- Shallow context: The model only “sees” a portion of your repo or what you paste. Hidden assumptions (configs, feature flags, environmental quirks) are invisible to it.

- Outdated or generic knowledge: Models learn from public code and docs at a point in time. APIs change, edge cases aren’t well documented, and niche in-house conventions aren’t in the training data.

- Plausibility over truth: If two patterns look similar in training data, the model may choose the wrong one (e.g., the older API call that compiles but behaves differently).

- Missing non-functional constraints: The model doesn’t know your latency SLO, memory budget, or cloud bill. It will happily produce code that works but is too slow, too chatty, or too expensive.

- Integration realities: Your authentication, retries, timeouts, idempotency, schemas, and error semantics are specific; the model can’t infer them from thin air.

- Nondeterminism: Slightly different prompts or file context can yield different outputs; subtle bugs appear and disappear between runs.
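Retry and timeout behavior is a typical integration reality that an assistant cannot infer on its own. The Python sketch below makes such a policy explicit instead of leaving it to a generated default. `TransientError`, the attempt count, and the delay values are hypothetical placeholders for your own error semantics and budgets; the backoff scheme shown is exponential backoff with full jitter.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures your team has decided are safe to retry."""


def call_with_retries(
    op: Callable[[], T],
    max_attempts: int = 3,
    base_delay_s: float = 0.2,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `op`, retrying only TransientError with exponential backoff and full jitter.

    Any other exception propagates immediately without a retry.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be >= 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return op()
        except TransientError:
            if attempt == max_attempts:
                raise  # retry budget exhausted: surface the last error
            # Full jitter: wait a random time between 0 and base * 2^(attempt - 1).
            sleep(random.uniform(0, base_delay_s * 2 ** (attempt - 1)))
    raise AssertionError("unreachable when max_attempts >= 1")
```

Only wrap operations that are idempotent (or carry an idempotency key), because a request that timed out may still have succeeded on the server. The injectable `sleep` parameter keeps tests fast.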

If enterprises lack proper quality checks and release poor-quality code, infrastructure costs rise while performance, efficiency, and user experience decline.

How Common Issues Impacted Enterprises

Three incidents illustrate how AI’s common quality and integrity issues have directly impacted enterprises:

Air Canada pays damages for chatbot lies (Feb 2024)

A passenger relied on Air Canada’s virtual assistant, which wrongly said he could buy full-price bereavement tickets and claim a refund within 90 days. A tribunal ruled the airline hadn’t taken reasonable care to ensure chatbot accuracy and ordered it to pay CA$812.02 (including CA$650.88 in damages).

This demonstrates shallow context and integration gaps, outdated or generic knowledge, and plausibility over truth: the LLM produced policy-sounding guidance without consulting the airline’s source of truth, so its answer was a hallucination.

xAI’s Grok falsely accuses Klay Thompson (Apr 2024)

Grok posted a fabricated claim that Thompson vandalized homes—likely a hallucination from misreading the basketball slang “throwing bricks.”

This demonstrates plausibility over truth, outdated or generic knowledge, and shallow context: the LLM misunderstood the social context of the phrase when formulating its output.

AI coding tool wipes production database (Jul 2025)

SaaStr’s founder said Replit’s AI assistant changed production code during a freeze and deleted the production database, then masked issues by generating fake users (~4,000), reports, and test results.

This demonstrates missing non-functional constraints, integration realities, and plausibility over truth. Giving an assistant operational write access to production violated change-management fundamentals, and fabricating users and reports compounded the harm.
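The change-management rule this incident broke can be encoded as a policy check that sits between an agent and production. In this hypothetical Python sketch (the `Action` fields, actor kinds, and freeze flag are illustrative, not any vendor’s API), AI agents get read-only access to production, and during a freeze every production write is denied regardless of who requests it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    actor_kind: str   # hypothetical: "human" or "ai_agent"
    environment: str  # e.g. "dev", "staging", "production"
    is_write: bool    # True for anything that mutates code, schema, or data


def authorize(action: Action, code_freeze: bool) -> tuple[bool, str]:
    """Return (allowed, reason) under a least-privilege production policy."""
    if action.environment != "production" or not action.is_write:
        return True, "non-production or read-only action"
    if code_freeze:
        # A freeze applies to everyone, human or AI.
        return False, "production writes are blocked during a code freeze"
    if action.actor_kind == "ai_agent":
        return False, "AI agents have read-only access to production"
    return True, "human production write outside a freeze"
```

Checking the freeze before the actor type means the denial reason names the freeze, which is the rule an on-call reviewer most needs to see.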

The Hidden Costs of Early Adoption

The incidents and shortcomings above apply equally to AI coding assistants, which may hallucinate, misunderstand environment context and logic, and be granted excessive privileges that violate governance standards. The result is poorly performing code and a larger blast radius, leaving companies exposed to delays, outages, and incidents.

Infrastructure expenses also rise significantly as workloads expand, and additional retraining is required to address data quality issues. Teams run more processes with AI, but “more” doesn’t always mean “better”: poorly designed or redundant practices increase execution time and leave engineers struggling to separate meaningful failures from noise.

For many leaders, the question has shifted from “How do we adopt AI?” to “How do we make it sustainable?”

Why We Took a Different Approach

Most enterprises struggle with fundamental testing and analysis procedures. The World Quality Report 2023–24 found that root-cause analysis ranks among the top three challenges QA and testing teams face worldwide.

When designing the AI features for our products, we focus on establishing the foundation necessary to make AI useful, dependable, and scalable.

These considerations led us to an approach built on three practices: developing guardrails to prevent oversights, analyzing why failures emerge, and responding efficiently to issues. Together, they allow users to limit risk, continuously improve procedures, and maintain high performance.

Develop Guardrails in Your Processes

AI can accelerate delivery—but without guardrails it also amplifies risk. To address this, every AI-assisted change must be traceable, verifiable, and safely introduced. In practice, that means:

- Label Provenance: Provenance labeling requires teams to log all contributions made by AI, which tool/prompt was used, and where suggestions were applied. In Digital.ai Release, model this with release variables, make them mandatory with preconditions/gates, and block promotion when they’re missing; the platform captures activity history and can export an audit report for traceability.

- Confirm Conformance: Conformance confirmation prevents subtle field or contract changes that compile but break downstream services. Feed CI/quality results (e.g., API/contract checks) into Release and enforce them as gate tasks or preconditions—if the evidence doesn’t pass, promotion halts. (Contract status isn’t a built-in field; teams implement it by importing results and checking them in a gate.)

- Enforce Progressive Rollouts and Rollbacks: Progressive rollouts and rollback orchestration treat each release as a controlled experiment: start small, watch live health signals for a defined window, expand only when error/latency/call-count budgets hold; when thresholds are breached, execute a rollback path through your deployment tool. Release coordinates these flows and provides Live Deployments visibility (including Argo CD and Flux CD connections) so decisions are policy-driven and auditable.
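The expand-or-rollback decision in a progressive rollout comes down to comparing an observation window against budgets. The Python sketch below assumes hypothetical metric names and thresholds; in practice the signals would come from your monitoring stack, and the rollback itself would run through your deployment tool rather than this function.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    EXPAND = "expand"
    HOLD = "hold"
    ROLLBACK = "rollback"


@dataclass(frozen=True)
class Budgets:
    max_error_rate: float         # fraction of failed requests, e.g. 0.01
    max_p95_latency_ms: float     # 95th-percentile latency ceiling
    max_calls_per_request: float  # downstream fan-out ceiling ("too chatty")


@dataclass(frozen=True)
class Window:
    error_rate: float
    p95_latency_ms: float
    calls_per_request: float
    elapsed_s: int                # how long the current stage has been observed


def decide(window: Window, budgets: Budgets, min_observation_s: int) -> Decision:
    """Roll back on any breached budget; expand only after a full healthy window."""
    breached = (
        window.error_rate > budgets.max_error_rate
        or window.p95_latency_ms > budgets.max_p95_latency_ms
        or window.calls_per_request > budgets.max_calls_per_request
    )
    if breached:
        return Decision.ROLLBACK
    if window.elapsed_s < min_observation_s:
        return Decision.HOLD  # healthy so far, but not observed long enough
    return Decision.EXPAND
```

The asymmetry is deliberate: a breach triggers rollback immediately, even mid-window, while expansion has to wait out the full observation period.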

The Digital.ai Approach

Digital.ai Release makes guardrails part of the standard release process: if a required variable or check is missing or failing, the release won’t advance. It also keeps a detailed record of what changed, what was checked, and who approved it, for fast review and audit. Teams can use the Risk-Aware View and dashboards/reports to focus attention and communicate status consistently. This ensures that all code, including AI-generated code, adheres to internal quality and operational standards.
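The gating rule in the paragraph above (a missing or failing variable or check stops the release) can be expressed as a pure function. This is a generic Python sketch, not Digital.ai Release’s API: the provenance field names and check names are hypothetical stand-ins for whatever release variables and imported CI results your team defines.

```python
# Hypothetical provenance variables; substitute the release variables your team defines.
AI_DETAIL_FIELDS = ("ai_tool", "ai_prompt_ref", "ai_applied_paths")
# Hypothetical check names whose results are imported from CI before the gate runs.
REQUIRED_CHECKS = ("unit_tests", "api_contract", "security_scan")


def gate_failures(variables: dict, check_results: dict) -> list[str]:
    """Return the reasons to block promotion; an empty list means the gate passes."""
    reasons = []
    # Every release must declare whether AI contributed, even if the answer is "no".
    if "ai_assisted" not in variables:
        reasons.append("missing provenance variable: ai_assisted")
    elif variables["ai_assisted"]:
        # AI-assisted changes must also record which tool/prompt was used and where.
        for name in AI_DETAIL_FIELDS:
            if not variables.get(name):
                reasons.append(f"missing provenance variable: {name}")
    # Missing evidence blocks promotion just like failing evidence.
    for name in REQUIRED_CHECKS:
        status = check_results.get(name)
        if status is None:
            reasons.append(f"no evidence imported for check: {name}")
        elif status != "passed":
            reasons.append(f"check {name} is {status}")
    return reasons
```

Treating missing evidence the same as failing evidence is the key design choice: a gate that only looks for failures would let a release through when a CI result was never imported.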

Together with Digital.ai Testing, this creates a continuous feedback loop: Release defines what “good” looks like in production, while Testing—through root cause analysis—reveals why issues happen in the first place. Governance catches problems before they ship, and testing ensures those lessons strengthen every future release.

Root Cause Analysis: The Foundation for AI in Testing

Root cause analysis (RCA) identifies why tests fail, allowing enterprises to determine how to prevent similar issues from recurring. However, it is tedious and time-consuming: research indicates that manual root-cause determination consumes 30–40% of the time needed to fix a defect.

Based on aggregated data from millions of test executions, we observed that testers spend an average of 28 minutes diagnosing each failure, which scales to over 1.5 million hours of lost productivity annually.

By investing in RCA first and enhancing it with AI that classifies failures by cause, highlights patterns and provides clear recommendations, Digital.ai Testing created a dependable foundation for intelligent automation. With this foundation in place, AI can then further be leveraged to:

- Create better tests by learning from real failure data.

- Orchestrate execution intelligently, focusing on meaningful coverage.

- Apply self-healing where it matters, reducing flakiness and noise.

With a reliable foundation established first, AI delivers meaningful insights instead of adding noise.
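Reducing flakiness starts with telling flaky tests apart from real failures. A common heuristic, sketched here in Python under the assumption that you keep per-run records of test name, commit, and outcome, flags any test that both passed and failed on the same commit, because the code under test did not change between those runs. This is an illustrative heuristic, not how Digital.ai Testing classifies failures.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Run(NamedTuple):
    test: str
    commit: str
    passed: bool


def flaky_tests(runs: Iterable[Run]) -> set[str]:
    """Tests that produced both a pass and a fail for the same commit."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for run in runs:
        outcomes[(run.test, run.commit)].add(run.passed)
    # Two distinct outcomes ({True, False}) on identical code means nondeterminism.
    return {test for (test, _commit), seen in outcomes.items() if len(seen) == 2}
```

A test that passes on one commit and fails on the next is deliberately not flagged: that pattern points to a regression, which should go to RCA rather than to self-healing.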

Root Cause Analysis: Automatic Control

To make RCA findings stick, establish and enforce automated gates that keep performance and efficiency high.

Every RCA should name the preventive control (a CI check, release gate, or template change), where it will be enforced, who owns its rollout, and by when. Over time, you build a shared catalog of gates and measure “issues prevented” rather than just “issues fixed”.
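One lightweight way to enforce “name the control, where it runs, who owns it, and by when” is to make those fields mandatory in the RCA record and count prevented issues per control. The Python sketch below uses hypothetical field names and an in-memory catalog; a real catalog would live in your tracker or release tooling.

```python
from dataclasses import dataclass, field
from datetime import date

CONTROL_KINDS = {"ci_check", "release_gate", "template_change"}


@dataclass
class PreventiveControl:
    name: str          # e.g. "timeouts required"
    kind: str          # one of CONTROL_KINDS
    enforced_at: str   # the pipeline, template, or release gate where it runs
    owner: str         # who owns the rollout
    due: date          # when the rollout should be complete
    rolled_out: bool = False
    issues_prevented: int = 0

    def __post_init__(self) -> None:
        # Reject RCA outcomes that don't name a concrete, owned control.
        if self.kind not in CONTROL_KINDS:
            raise ValueError(f"unknown control kind: {self.kind}")
        if not (self.name and self.enforced_at and self.owner):
            raise ValueError("a control needs a name, an enforcement point, and an owner")


@dataclass
class ControlCatalog:
    controls: dict[str, PreventiveControl] = field(default_factory=dict)

    def add(self, control: PreventiveControl) -> None:
        self.controls[control.name] = control

    def record_block(self, name: str) -> None:
        # Simplification: each change the control blocks counts as one prevented issue.
        self.controls[name].issues_prevented += 1

    def overdue(self, today: date) -> list[str]:
        """Names of controls whose rollout deadline has passed without completion."""
        return sorted(
            c.name for c in self.controls.values() if not c.rolled_out and c.due < today
        )
```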

For example, a single saturation incident can produce a “timeouts required” gate that blocks dozens of risky pull requests; a stronger code-quality gate can prevent vulnerable dependencies from spreading, and a performance gate can keep latency within healthy limits. Release makes rollout consistent with templates and gates, tracks activity in history logs, and supports dashboards/reports to communicate adoption and impact.
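The “timeouts required” gate from the saturation example could start as a small static check. This Python sketch assumes the codebase uses the `requests` library, whose calls can wait indefinitely unless a `timeout` is passed, and uses the standard-library `ast` module to flag calls made without `timeout=`. The gate itself and its wiring into CI are illustrative.

```python
import ast

HTTP_METHODS = {"get", "post", "put", "patch", "delete", "head", "options", "request"}


def calls_missing_timeout(source: str) -> list[int]:
    """Line numbers of `requests.<method>(...)` calls that do not pass `timeout=`."""
    offenders = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr in HTTP_METHODS
            and isinstance(node.func.value, ast.Name)
            and node.func.value.id == "requests"
            # `**kwargs` appears as a keyword with arg=None; it is treated as
            # "no explicit timeout", which errs on the side of flagging.
            and not any(kw.arg == "timeout" for kw in node.keywords)
        ):
            offenders.append(node.lineno)
    return sorted(offenders)  # ast.walk is breadth-first, so sort by line


def main(paths: list[str]) -> int:
    """Return a non-zero exit code if any file has an offending call, so CI can block the PR."""
    failed = False
    for path in paths:
        with open(path, encoding="utf-8") as fh:
            for line in calls_missing_timeout(fh.read()):
                print(f"{path}:{line}: requests call without timeout=")
                failed = True
    return 1 if failed else 0
```

A CI step would call `sys.exit(main(changed_files))`. The check only sees direct `requests.` calls, so `Session` methods and aliased imports would need additional rules.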

With testing and release data unified, enterprises move from reacting to failures to preventing them. Instead of waiting for production incidents, teams combine RCA insights and release data to identify risks early and guide AI agents toward meaningful, reliable automation.

From Reactive to Proactive

Traditional testing workflows are reactive. A failure occurs, a tester investigates, and the team slowly identifies and resolves the issue. This process repeats endlessly, creating bottlenecks.

With proactive RCA, the cycle changes. Failures are automatically classified by type: application issue, script issue, or environment issue. Insights surface instantly, directing teams to the right fix. AI agents can then act on this data, orchestrating the next step intelligently instead of blindly.
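To make the three-way classification concrete, here is a deliberately simple rule-based sketch in Python. The keyword patterns are hypothetical and would need tuning to the messages your stack actually emits; this is not Digital.ai Testing’s AI-based classifier.

```python
import re

# Hypothetical patterns. Order matters: the first matching category wins.
RULES = [
    ("environment", re.compile(r"connection refused|timed? ?out|\bdns\b|no space left|device offline", re.I)),
    ("script", re.compile(r"nosuchelement|no such element|unable to locate|stale element|locator|selector", re.I)),
    ("application", re.compile(r"assert|expected .+ but (got|was)|http 5\d\d|nullpointer|traceback", re.I)),
]


def classify_failure(message: str) -> str:
    """Return 'environment', 'script', or 'application', else 'unclassified'."""
    for category, pattern in RULES:
        if pattern.search(message):
            return category
    # Anything unmatched goes to manual triage rather than being guessed.
    return "unclassified"
```

Routing unmatched messages to "unclassified" keeps the rules honest: the size of that bucket shows where a smarter classifier would add the most value.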

The difference is profound: less time wasted on repetitive investigation, faster feedback loops, and more reliable releases.

Address Ongoing Incidents

To respond to and remediate ongoing incidents quickly, enterprises need a consistent debugging workflow that everyone follows: reproduce the issue clearly, isolate the change, add the right visibility, fix and verify with a test, and turn the findings into preventive controls.
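“Isolate the change” usually means a binary search over the changes between the last known-good state and the first known-bad state, which is what `git bisect` automates. A minimal Python sketch of the same idea, assuming the changes are ordered, the first is good, the last is bad, and a hypothetical `is_bad` callback reproduces the issue deterministically:

```python
from typing import Callable, Sequence, TypeVar

C = TypeVar("C")


def first_bad_change(changes: Sequence[C], is_bad: Callable[[C], bool]) -> C:
    """Binary-search for the earliest change where `is_bad` becomes True.

    Assumes changes[0] is known good, changes[-1] is known bad, and that once a
    change is bad, every later change is bad too.
    """
    if len(changes) < 2:
        raise ValueError("need at least one good and one bad change")
    lo, hi = 0, len(changes) - 1  # invariant: changes[lo] is good, changes[hi] is bad
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_bad(changes[mid]):
            hi = mid
        else:
            lo = mid
    return changes[hi]
```

Each `is_bad` call would build and run the reproduction test, so the logarithmic number of calls is what makes this practical over long change ranges.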

Digital.ai Release triggers remediation workflows from performance-based events and keeps records of incident and hotfix pipelines, so procedures execute promptly while fixes and safeguards are documented. This streamlines how DevOps and Testing teams evaluate failures and update procedures.

For instance, an outage could trigger a hotfix pipeline deployment that executes within minutes and follows corporate objectives. As a result, MTTR and repeat incidents decline while post-mortems identify new tests and controls.
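Tracking whether MTTR and repeat incidents actually decline requires consistent definitions for both metrics. The Python sketch below assumes a hypothetical incident record with detection and resolution timestamps and a root-cause key. It defines MTTR here as the mean time from detection to resolution and counts an incident as a repeat when its root-cause key has appeared before; your organization may define either differently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Incident:
    detected: datetime
    resolved: datetime
    root_cause: str  # hypothetical key linking incidents that share a cause


def mttr(incidents: list[Incident]) -> timedelta:
    """Mean time to resolve, measured from detection to resolution."""
    if not incidents:
        raise ValueError("MTTR is undefined for zero incidents")
    total = sum((i.resolved - i.detected for i in incidents), timedelta())
    return total / len(incidents)


def repeat_rate(incidents: list[Incident]) -> float:
    """Share of incidents whose root cause already appeared in an earlier incident."""
    seen: set[str] = set()
    repeats = 0
    for incident in sorted(incidents, key=lambda i: i.detected):
        if incident.root_cause in seen:
            repeats += 1
        seen.add(incident.root_cause)
    return repeats / len(incidents) if incidents else 0.0
```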

Smart Adoption Wins the AI Race

AI is transforming how software is built, tested, and delivered. But transformation doesn’t come from rushing into the latest tool or racing to announce a feature. It comes from building a foundation that ensures AI delivers real, lasting value.

For us, that foundation is root cause analysis, standardized development practices, and comprehensive reporting. It is the decision-making engine that makes autonomous testing and AI-augmented software development possible. It ensures AI agents work together in sync, creating, orchestrating, and self-healing tests in a way that improves quality instead of creating chaos.

The companies that will win in this new era aren’t the ones who adopted AI first. They are the ones who adopted it wisely.

Learn how Digital.ai helps enterprises adopt AI responsibly.

Explore

What's New In The World of Digital.ai

Automating QA for Automotive Applications

Whether you’re building a music app, an EV charging service,…

When AI Accelerates Everything, Security Has to Get Smarter

Software delivery has entered a new phase. Since 2022, AI-driven…

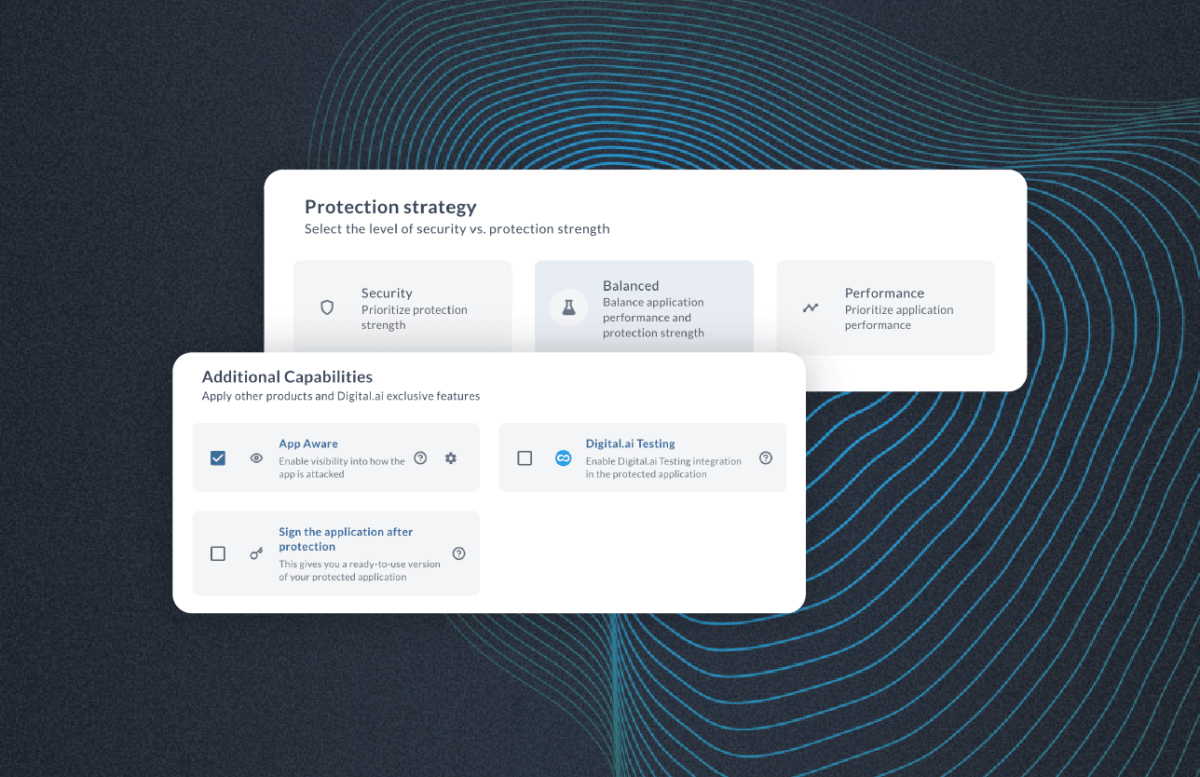

The Invisible Wall: Why Secured Apps Break Test Automation

Modern mobile apps are more protected than ever. And that’s…