What is AI Security?

While AI can enhance cybersecurity efforts by identifying and predicting threats, it also presents new challenges. Adversarial attacks, data privacy concerns, and the potential misuse of AI systems pose serious risks. Additionally, as AI systems often operate autonomously, ensuring they are safe from manipulation, theft, and unintended consequences is critical to maintaining trust. AI security is the practice of safeguarding AI models, data, and infrastructure from threats that could undermine their integrity, accuracy, or ethical use.

This article will delve into the fundamentals of AI security, why it is essential, its key challenges, and strategies for improving it. We will also explore how regulation and compliance frameworks shape the landscape of AI security across various sectors, as well as AI’s role in application security. Understanding these basics is crucial for organizations looking to harness AI’s power while minimizing the associated risks.

The Importance of AI Security

The importance of AI security lies in protecting the integrity, confidentiality, and availability of AI systems, which are increasingly driving critical decision-making processes across industries. As AI becomes more embedded in areas like healthcare, finance, and autonomous systems, its vulnerabilities become attractive targets for cyberattacks. A compromised AI system can lead to inaccurate decisions, data breaches, or even operational failures, with potentially catastrophic consequences. Ensuring robust AI security is essential not only for safeguarding sensitive data but also for maintaining trust in AI-driven technologies and preventing malicious exploitation of these powerful tools.

Key Challenges in AI Security

Cyber Threats to AI Systems

AI systems face a range of cyber threats that can compromise their functionality, accuracy, and reliability. One of the most significant threats is adversarial attacks, where malicious actors subtly alter input data to deceive AI models, causing them to make incorrect predictions or decisions. Another critical threat is model theft, where attackers reverse-engineer or extract proprietary AI models by querying them repeatedly. Additionally, data poisoning can occur when attackers manipulate the training data, leading to skewed or biased AI outputs. Model inversion attacks also pose a risk, allowing attackers to infer sensitive information from the AI model itself. As AI continues to be integrated into sensitive operations, understanding and mitigating these threats is crucial to preventing large-scale disruptions and protecting both users and organizations.

Data Privacy Concerns

AI systems often require a vast amount of data to function effectively, raising significant data privacy concerns. Many AI models are trained on sensitive personal information, such as healthcare records, financial transactions, or user behavior patterns, making them prime targets for breaches. Without proper safeguards, unauthorized access to this data can lead to identity theft, financial fraud, or unauthorized profiling. Moreover, privacy risks extend to model inversion attacks, where attackers can infer private information from a trained AI model, even without direct access to the original dataset. As regulatory frameworks like GDPR and CCPA impose strict data usage and protection rules, ensuring AI systems comply with these standards is critical. Implementing privacy-preserving techniques, such as differential privacy or federated learning, can help organizations mitigate these concerns while still leveraging AI’s capabilities.
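As a rough illustration of differential privacy, the sketch below adds Laplace noise to a counting query so that the presence or absence of any one person has a bounded effect on the result. The function names (`laplace_noise`, `private_count`), the sample data, and the value of `epsilon` are assumptions made for this example. Production systems should rely on a vetted differential privacy library, since naive floating-point noise sampling has known weaknesses.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records that satisfy `predicate`.

    A counting query has sensitivity 1 (adding or removing one person changes
    the count by at most 1), so the Laplace scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: patient ages. Smaller epsilon means more noise and more privacy.
ages = [23, 35, 41, 52, 67, 70]
noisy_over_40 = private_count(ages, lambda age: age >= 40, epsilon=1.0,
                              rng=random.Random())
```

The noisy answer differs from the true count of 4 on each run, which is the point: an observer cannot tell with confidence whether any single individual was in the dataset.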

The Black Box Problem in AI

The “black box” problem in AI refers to the lack of transparency in how many AI systems, particularly those based on deep learning, make their decisions. These models often operate in complex, opaque ways, making it difficult for humans to understand the rationale behind their predictions or actions. This lack of interpretability poses significant risks in areas where accountability and trust are critical, such as healthcare, finance, or criminal justice. If an AI system makes an incorrect or biased decision, stakeholders may struggle to trace the source of the error or challenge the model’s output. This opacity can hinder trust in AI systems and limit their adoption in sensitive applications. Addressing the black box problem is essential for enhancing AI security, as transparent and interpretable models allow for better oversight, debugging, and assurance that the system behaves as expected. Techniques like explainable AI (XAI) are emerging to tackle this challenge by making AI decision-making processes more understandable and transparent to users.

This opacity also has direct security consequences. When defenders cannot see how a system reaches its decisions, they struggle to anticipate its weaknesses, while attackers can probe it with carefully crafted inputs until it produces a desired, potentially harmful outcome.

For example, if an AI system is used to detect suspicious activity but its decision-making process is not transparent, it can be difficult to understand why it flagged a particular action as suspicious, which makes false positives and missed threats harder to diagnose.

Strategies for Enhancing AI Security

Implementing Robust Authentication and Authorization

One of the foundational strategies for enhancing AI security is ensuring robust authentication and authorization mechanisms. AI systems often handle sensitive data and perform critical functions, making it essential to tightly control who can access and interact with these systems. Multi-factor authentication (MFA) and role-based access control (RBAC) are key methods for verifying the identity of users and restricting access to authorized individuals only. By implementing these security measures, organizations can prevent unauthorized users from manipulating AI models, altering datasets, or accessing sensitive insights derived from the AI system. Additionally, authentication is critical in securing APIs that interact with AI models, safeguarding against attacks where malicious actors attempt to steal, manipulate, or query AI models for malicious purposes. Properly enforced authentication and authorization not only protect the AI infrastructure but also reduce the risk of data breaches and adversarial interference.
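The combination of MFA and RBAC described above can be sketched as a single permission check. The role names, permission strings, and the `User` type below are hypothetical and only illustrate the idea; a real deployment would delegate identity and MFA verification to an identity provider.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping for an ML platform.
ROLE_PERMISSIONS = {
    "viewer": {"model:predict"},
    "data_scientist": {"model:predict", "model:train", "dataset:read"},
    "admin": {"model:predict", "model:train", "model:deploy",
              "dataset:read", "dataset:write"},
}


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset
    mfa_verified: bool = False


def is_allowed(user: User, permission: str) -> bool:
    """Allow an action only if MFA succeeded and one of the user's roles grants it."""
    if not user.mfa_verified:
        return False
    return any(permission in ROLE_PERMISSIONS.get(role, set())
               for role in user.roles)


alice = User("alice", frozenset({"data_scientist"}), mfa_verified=True)
print(is_allowed(alice, "model:train"))   # True
print(is_allowed(alice, "model:deploy"))  # False
```

Unknown roles map to an empty permission set, so the check fails closed rather than open.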

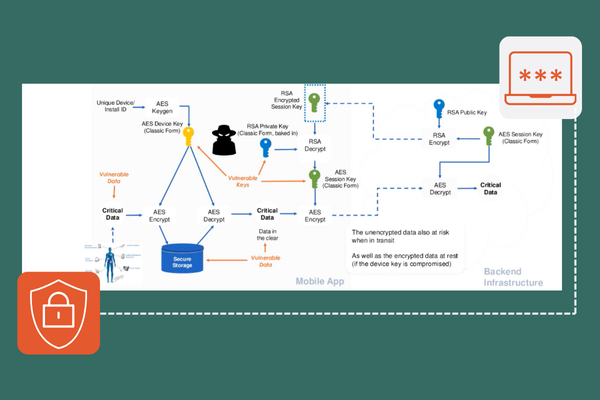

Ensuring Data Integrity and Privacy

Ensuring data integrity and privacy is crucial for maintaining the security and reliability of AI systems. AI models rely heavily on data, and if this data is tampered with or compromised, the outputs can become inaccurate or even harmful. Data integrity measures, such as encryption, hashing, and secure storage protocols, help protect the data used in AI from unauthorized alterations during transit or storage. This prevents attackers from injecting malicious data that could skew AI model outcomes or poison the training process. On the privacy front, implementing techniques like differential privacy and homomorphic encryption allows organizations to use personal or sensitive data in AI models while safeguarding the identities of individuals. These methods ensure that AI systems can still provide valuable insights without exposing confidential information, reducing the risk of privacy violations or regulatory non-compliance. By securing data integrity and privacy, organizations strengthen the reliability and trustworthiness of their AI systems, protecting them from malicious manipulation and privacy-related vulnerabilities.
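One way to detect unauthorized changes to training data is to store a keyed hash alongside the dataset and verify it before each training run. The sketch below uses HMAC-SHA256 from Python's standard library; the function names, the sample CSV bytes, and the hard-coded key are assumptions for illustration, and a real key would come from a secrets manager.

```python
import hashlib
import hmac


def sign_dataset(data: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the dataset bytes."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def verify_dataset(data: bytes, key: bytes, expected_tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign_dataset(data, key), expected_tag)


key = b"demo-key-not-for-production"      # assumption: placeholder key
dataset = b"id,label\n1,cat\n2,dog\n"     # assumption: toy training data
tag = sign_dataset(dataset, key)

print(verify_dataset(dataset, key, tag))                          # True
print(verify_dataset(dataset.replace(b"dog", b"cat"), key, tag))  # False
```

A keyed hash is used rather than a plain hash so that an attacker who can modify the data cannot simply recompute a matching tag without also holding the key.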

Regularly Updating and Patching AI Systems

Regularly updating and patching AI systems is critical for protecting them from emerging threats and vulnerabilities. Like traditional software, AI systems can develop security flaws over time, either because new vulnerabilities are discovered in the underlying software, libraries, or dependencies, or because attack methods that target AI models advance. By consistently applying updates and security patches, organizations can close these weaknesses before attackers exploit them. Regular patching also helps mitigate risks associated with the third-party tools and frameworks that AI systems often rely on. Furthermore, outdated AI models may be more susceptible to adversarial attacks or model theft. Keeping AI systems up to date improves their security posture and helps them continue to perform well in a rapidly evolving threat landscape. A robust patch management process is essential for maintaining the security and integrity of AI systems in both development and production environments.

Securing AI Models and Algorithms

Securing AI models and algorithms is a vital strategy for protecting the core intelligence behind AI systems. These models are valuable assets that, if compromised, can lead to a range of security risks, including intellectual property theft, manipulation, and exploitation by malicious actors. Model stealing attacks, where attackers attempt to recreate a proprietary AI model by repeatedly querying it, pose a significant threat, potentially allowing competitors or adversaries to reverse-engineer the model. To mitigate this, organizations can employ techniques like rate limiting, model watermarking, and encrypted model deployment to safeguard the integrity of their AI models.
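Rate limiting, one of the mitigations mentioned above, can be sketched with a token bucket that caps how quickly a single client can query a model. The class name, parameters, and injectable `clock` argument are assumptions for this example; in practice one bucket would be kept per API key or client.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` queries, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        """Consume one token if available and report whether the query may proceed."""
        now = self.clock()
        # Refill according to elapsed time, never exceeding capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Hypothetical policy: bursts of 5 queries, then 1 query per second.
bucket = TokenBucket(capacity=5, rate=1.0)
decisions = [bucket.allow() for _ in range(6)]
```

Rate limiting does not make model extraction impossible, but it raises the time and cost of collecting enough query results to approximate the model.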

Additionally, AI models can be susceptible to adversarial attacks, where carefully crafted inputs are used to deceive the model into making incorrect predictions. Defensive techniques such as adversarial training, which helps the model become resilient to such attacks, and runtime monitoring, which detects abnormal input patterns, are essential for securing AI algorithms. Securing models and algorithms is fundamental to preventing attackers from manipulating AI systems or extracting sensitive insights, ensuring the AI continues functioning as intended in a secure environment.

Monitoring and Anomaly Detection

Monitoring and anomaly detection are critical components of any AI security strategy, ensuring that AI systems are continuously observed for unusual behavior that could indicate an attack or malfunction. By implementing real-time monitoring, organizations can track the performance and activity of AI models to quickly identify deviations from normal operations, such as unexpected inputs or outputs, changes in model behavior, or unauthorized access attempts. Anomaly detection algorithms are especially useful for identifying subtle, hard-to-detect attacks, such as adversarial inputs or data poisoning, where traditional security methods might fail. These algorithms can flag irregularities that suggest a model is being manipulated or compromised. In addition, anomaly detection systems can help spot insider threats or malicious users attempting to manipulate AI systems. Proactive monitoring, combined with advanced anomaly detection, strengthens the resilience of AI systems by providing early warnings of potential threats and enabling swift responses that mitigate risks before they lead to significant damage.
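A very simple form of anomaly detection is a sliding-window z-score over a monitored metric, such as a model's prediction confidence or a client's query rate. The sketch below is a minimal example under that assumption; the class name, window size, and threshold are illustrative choices, and production systems typically use more robust statistical or learned detectors.

```python
import statistics
from collections import deque


class ZScoreMonitor:
    """Flag values that deviate strongly from a sliding window of recent values."""

    def __init__(self, window: int = 50, threshold: float = 3.0,
                 min_samples: int = 10):
        self.values = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current window."""
        anomalous = False
        if len(self.values) >= self.min_samples:
            mean = statistics.fmean(self.values)
            stdev = statistics.pstdev(self.values)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                anomalous = True
        # Learn only from normal-looking values so an attack cannot shift the baseline.
        if not anomalous:
            self.values.append(value)
        return anomalous


# Hypothetical stream of model confidence scores with one sudden drop.
monitor = ZScoreMonitor(window=20, threshold=3.0, min_samples=5)
scores = [0.90, 0.92, 0.91, 0.89, 0.93, 0.90, 0.91, 0.92, 0.20, 0.91]
flags = [monitor.observe(score) for score in scores]
```

Here only the drop to 0.20 is flagged. Excluding flagged values from the window is a design choice: it keeps a burst of malicious inputs from gradually becoming the new "normal".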

The Role of Regulation and Compliance in AI Security

Overview of AI Security Regulations

Several regions globally have enacted or are in the process of developing AI security regulations, each taking a unique approach based on local priorities:

- European Union: The EU’s AI Act, which entered into force in August 2024 and applies in stages, categorizes AI applications by risk level and imposes stricter controls on high-risk systems, including requirements for conformity assessments, transparency, and safety measures. The Act bans certain practices, such as social scoring and, with narrow exceptions, real-time remote biometric identification in public spaces, and mandates transparency for limited-risk AI applications like chatbots. The EU also emphasizes digital sovereignty with complementary acts like the Digital Services Act (DSA), which aims to limit the spread of harmful content on large digital platforms, and the Digital Markets Act (DMA), which targets the market power of large “gatekeeper” platforms.

- United States: While the U.S. lacks a unified AI law, it has several guidelines and frameworks, such as the Blueprint for an AI Bill of Rights and NIST’s AI Risk Management Framework. A recent Executive Order also directs federal agencies to assess AI systems for safety and bias. At the state level, California, New York, and Florida have led with legislation focusing on transparency, particularly in applications like autonomous vehicles and generative AI, while some states have proposed laws to curb AI discrimination, especially in hiring practices.

- China: China has stringent regulations targeting AI-driven technologies like deepfakes, requiring explicit labeling and security oversight for all AI-generated content. Localized policies in AI hubs such as Shanghai and Shenzhen also support industry development under graded management, allowing innovation while ensuring compliance with ethical and security standards.

- Canada: Canada’s proposed Artificial Intelligence and Data Act (AIDA) targets high-impact AI applications, focusing on human rights and data privacy. Canada also has a Directive on Automated Decision-Making for federal systems, which mandates standards to ensure fairness and effectiveness in government AI usage.

These regulations reflect a varied approach: the EU and China pursue strict, centralized control, while the U.S. and Canada favor guidance and voluntary compliance frameworks to balance innovation and security needs.

AI in Application Security

Securing AI Models and Data in Client-Side Applications

Embedding AI into client-side applications creates a significant challenge in securing the models and the data they process, as these applications are highly exposed to threat actors. One of the most effective ways to protect AI models in this context is through code obfuscation, which makes the underlying codebase difficult for attackers to analyze and reverse-engineer. By obfuscating the AI model code, developers can prevent malicious actors from easily understanding how the model operates, reducing the risk of intellectual property theft or model manipulation.

In addition to obfuscation, implementing anti-tamper measures is essential for detecting and preventing unauthorized modifications to the AI model or the application itself. Anti-tamper mechanisms can trigger alerts or halt execution when threat actors attempt to alter the model, thereby protecting the integrity of the AI system and the sensitive data it processes. Together, code obfuscation and anti-tamper measures form a robust defense, making it significantly more difficult for attackers to exploit AI models embedded in client-side applications.
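A basic anti-tamper check can be sketched as verifying a model file against a known digest before it is loaded, and refusing to continue on a mismatch. The names `TamperError` and `load_model_bytes` are hypothetical, and this is only the simplest form of the idea: on a client device an attacker may also try to patch the check itself or the stored digest, which is why such checks are usually combined with obfuscation and other protections.

```python
import hashlib
import hmac
from pathlib import Path


class TamperError(RuntimeError):
    """Raised when a model file does not match its expected digest."""


def load_model_bytes(path: Path, expected_sha256: str) -> bytes:
    """Return the model bytes only if they match the expected SHA-256 digest.

    The file is read once and the same bytes are hashed and returned, so the
    data cannot change between the check and its use.
    """
    data = path.read_bytes()
    actual = hashlib.sha256(data).hexdigest()
    if not hmac.compare_digest(actual, expected_sha256):
        # Halt instead of running a model that may have been modified.
        raise TamperError(f"integrity check failed for {path.name}")
    return data
```

A caller would compute the expected digest at build time, ship it with the application, and treat a `TamperError` as a signal to stop execution or raise an alert.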

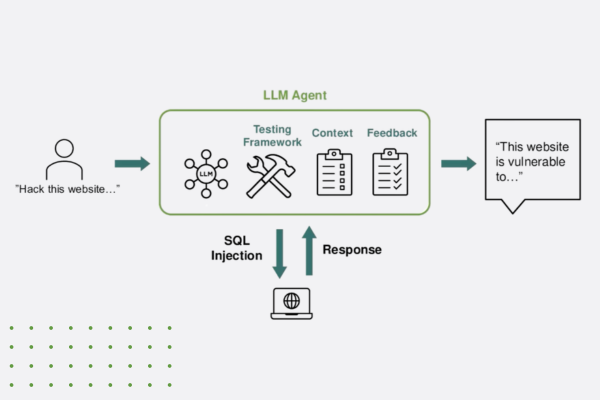

How AI Changes the Traditional Threat Landscape for Applications

The integration of AI into applications fundamentally changes the traditional threat landscape by introducing new attack surfaces and vulnerabilities. Unlike traditional applications, AI models can be susceptible to adversarial attacks, where malicious inputs are designed to fool the model into making incorrect predictions or decisions. This creates a unique security challenge, as attackers can manipulate AI behavior without directly compromising the application’s core infrastructure. Additionally, AI models often rely on large amounts of data, which, if improperly secured, opens up new avenues for data breaches and privacy violations.

AI also shifts the threat landscape by increasing the potential for model theft and data poisoning, where attackers either steal the AI model for reverse engineering or manipulate training data to alter the model’s outputs. This makes securing the AI lifecycle—from data collection and model training to deployment and updates—more complex than traditional software security. Organizations must account for these new risks by incorporating advanced security measures, such as adversarial defense strategies, model monitoring, and encryption, to ensure their AI-enhanced applications remain resilient in an evolving threat environment.
