Pitter Patter

In last week’s, er, last month’s episode of AI weekly (most weeks excluded), I shared some thoughts on how LLMs are being used to accelerate malicious attacks, and on the growing need for a comprehensive security strategy. While I’m sure no one reading this uses the same password on multiple websites, plenty of vulnerable application end users do. Fortunately, LLMs help anyone that asks, and the security space hasn’t been passive in the AI adoption wave. From writing code to reviewing pull requests to sifting through mountains of data, AI has been there every step of The Way. That is, if we specifically define The Way as the time from November 30th, 2022, and on.

Just like any cat-and-mouse game worth playing, both the cats and the mice must adapt to the changing environment around them, and we’ve been laser-focused on the ways AI can help protect against malicious attackers. While AI is accelerating attackers, it’s also accelerating the defensive side of the equation. The same technology that empowers attackers also gives defenders powerful tools to build more intelligent, resilient security systems.

Whatchyadoin With All That Data Over There, Bud?

Let’s start with a straightforward example. As writing code becomes increasingly democratized, we’ve seen a substantial spike in botting. Beyond just the sheer numerical increase, it’s also becoming difficult to differentiate bots from real end-users. This pandemic hits many industries, but few are impacted as heavily as the gaming industry. Like Captain Rex said in Star Wars Rebels, “This place used to be crawling with them. We called them ‘clankers’”. Rampant bot presence is cutting into the gaming industry in a big way. They harm in-game economies, scare real paying players away, and run around in some of the ugliest fashion I’ve ever seen. Detecting them isn’t easy, but it’s getting easier as the training data on player vs. bot behavior grows.

Any good detection strategy revolves around one fundamental question: what’s different? Telling player behavior apart from bot behavior with high accuracy is largely a matter of collecting enough behavioral and environmental data. Consider questions like:

- How does the player’s mouse move? Is it inconsistent and slightly shaky, or does it always pass through the exact same x, y coordinate pairs over thousands of actions?

- What information can we get from a phone’s gyroscope? Has it moved at all? Is there even a finger pressing the screen?

- Does it make sense that this OSRS account has 99 hunting, 1 in every other skill, and is logged off for exactly 10 seconds once every 60 minutes on the dot?
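As a rough illustration of the questions above, here is a minimal Python sketch of two "what's different?" checks: how often a mouse path lands on the exact same pixels, and how clockwork-regular a series of logouts is. Everything in it is an assumption made for the example (the function names, the thresholds, and the shape of the input data); it isn't a description of any real product's detection logic.

```python
from collections import Counter
from statistics import pstdev


def repeated_point_ratio(points):
    """Fraction of mouse samples that land on an already-seen (x, y) pixel."""
    if not points:
        return 0.0
    counts = Counter(points)
    repeats = sum(n - 1 for n in counts.values())
    return repeats / len(points)


def interval_jitter(timestamps):
    """Standard deviation (in seconds) of the gaps between events.

    Returns None when there are fewer than two gaps to compare.
    """
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    return pstdev(gaps) if len(gaps) >= 2 else None


def looks_automated(points, logout_times, max_jitter=1.0, min_repeat_ratio=0.9):
    """Hypothetical rule: flag paths that are too repetitive or logouts that are too regular.

    Both thresholds are illustrative guesses, not tuned values.
    """
    jitter = interval_jitter(logout_times)
    too_regular = jitter is not None and jitter <= max_jitter
    too_repetitive = repeated_point_ratio(points) >= min_repeat_ratio
    return too_regular or too_repetitive


# A bot that clicks the same two pixels and logs off every 60 minutes on the dot.
bot_points = [(100, 200), (150, 250)] * 500
bot_logouts = [0, 3600, 7200, 10800]

# A player whose cursor wanders and whose breaks are irregular.
human_points = [(i, (i * 2) % 97) for i in range(1000)]
human_logouts = [0, 3100, 9000, 9400]

print(looks_automated(bot_points, bot_logouts))
print(looks_automated(human_points, human_logouts))
```

In practice a real player will revisit some pixels and a careful bot will add jitter, so hand-written rules like these are better thought of as features to feed a model than as a verdict on their own.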

The application threat detection scene is much the same. Instead of the above questions we ask questions like:

- What guards have run, and in what order?

- Which device makes and models are most likely to be used in attacks?

- Has an end user just logged into their account while being 1,609.34 kilometers (1,000 miles in Freedom Units) away from their last login? Did they go coast to coast in three minutes?
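The coast-to-coast question can be sketched in a few lines of Python. This is a minimal "impossible travel" check under stated assumptions: logins arrive as (latitude, longitude, Unix seconds) tuples, great-circle distance is a good enough estimate, and the speed and distance thresholds are illustrative picks. The function names are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_impossible_travel(prev, curr, max_speed_kmh=1000.0, min_distance_km=50.0):
    """Return True when two logins imply a faster trip than max_speed_kmh.

    prev and curr are (lat, lon, unix_seconds) tuples. The thresholds are
    assumptions: roughly airliner speed, plus a floor so that small
    geolocation wobble is never flagged.
    """
    distance = haversine_km(prev[0], prev[1], curr[0], curr[1])
    if distance < min_distance_km:
        return False
    hours = (curr[2] - prev[2]) / 3600
    if hours <= 0:
        # Far apart at the same instant (or out of order): treat as suspicious.
        return True
    return distance / hours > max_speed_kmh


new_york = (40.7128, -74.0060)
los_angeles = (34.0522, -118.2437)

three_minutes = is_impossible_travel((*new_york, 0), (*los_angeles, 180))
six_hours = is_impossible_travel((*new_york, 0), (*los_angeles, 6 * 3600))
print(three_minutes, six_hours)
```

A flag here is a signal rather than proof. VPNs and mobile carrier routing can make a perfectly honest user appear to teleport, which is why a check like this works best as one input among many.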

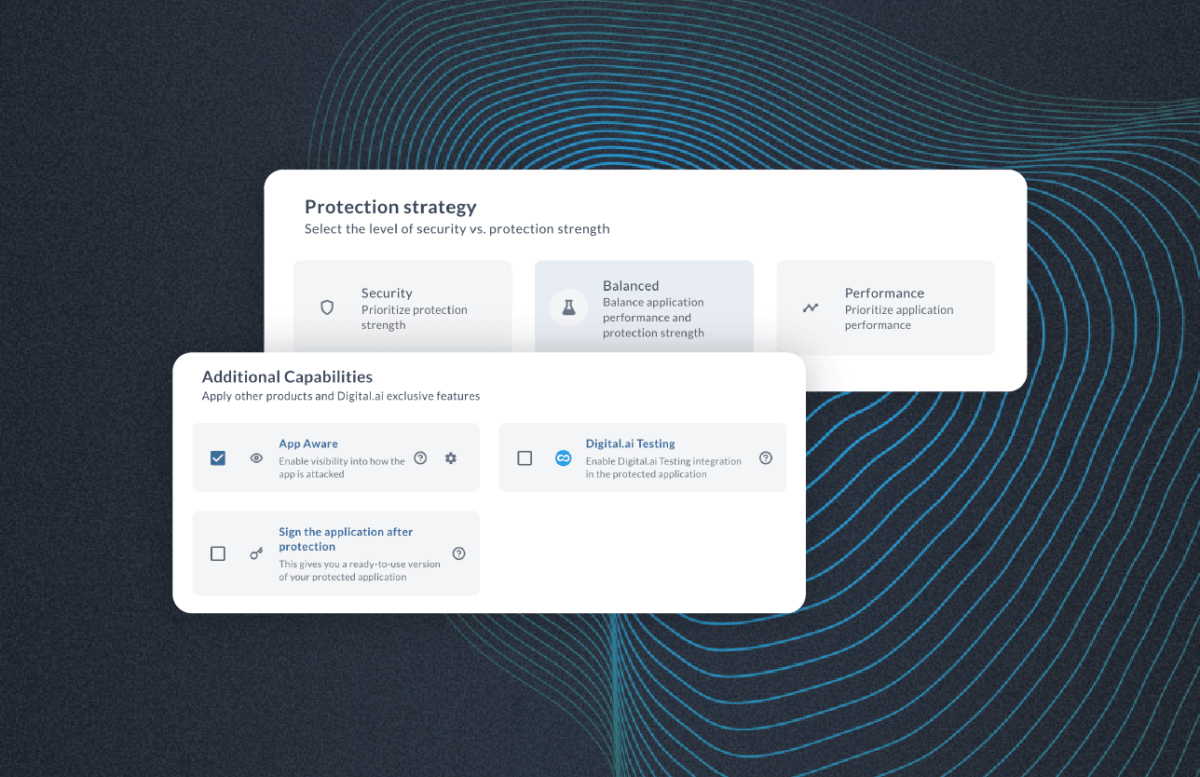

Answering all of these questions is possible with enough data collection and processing. That’s where AI comes in. We can, through App Aware data as seen in our threat report, use our AI & LLM experience to identify the risk level of any given user based on their behavior. Sometimes a jailbroken device is just Grandma Mabel trying to change the theme of her iPhone, but when Grandma starts triggering checksum tamper actions, we start to wonder why her ears are so big.

That’s What I Appreciates About AI

To give a more relevant use case in application security, there are valuable bits and bytes of information all throughout an application’s runtime. An application’s stack and heap are constantly modified, but often in ways that form clear patterns. The stack, a region of memory that stores local variables, function parameters, and return addresses, provides a real-time record of a program’s execution flow. Just looking through an application’s execution flow can identify the needle in the haystack, or the corn plant in the soybean field. If, for a specific payment processing application, 99.9% of executions call openPaymentOverlay, then validateToken, then transferPayment:Value, we can use that control flow “signature” to identify legitimate users. The 0.1% of executions that call transferPayment:Value without the two preceding calls would stick out like a sore thumb in that dataset. Examples like this can leverage AI’s rapid processing of large data pools to enable increasingly advanced detection mechanisms. While application hardening can leverage LLMs to create meaningful and unique detection mechanisms, it’s not the only security segment that benefits.
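To make the control flow “signature” idea concrete, here is a minimal Python sketch. It assumes each execution has already been reduced to an ordered list of call names, and it uses a plain frequency count as a stand-in for whatever model would do this at scale. The helper name rare_signatures and the 1% cutoff are hypothetical.

```python
from collections import Counter


def rare_signatures(traces, min_share=0.01):
    """Return the call sequences that make up less than min_share of all traces.

    Each trace is an ordered list of call names from one execution. The cutoff
    is an illustrative assumption, not a recommended value.
    """
    counts = Counter(tuple(trace) for trace in traces)
    total = sum(counts.values())
    return {sig for sig, n in counts.items() if n / total < min_share}


# 999 executions follow the expected flow; one jumps straight to the transfer.
expected = ["openPaymentOverlay", "validateToken", "transferPayment:Value"]
skipped = ["transferPayment:Value"]
traces = [expected] * 999 + [skipped]

for signature in rare_signatures(traces):
    print("unusual control flow:", " -> ".join(signature))
```

A count like this only says a flow is unusual, not that it’s malicious. Rarely used but legitimate paths would show up too, so the output is a starting point for deciding where to look.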

Ope, Found Yourself a CVE

Not much to explain here, just roll the footage.

Tracking CVEs (Common Vulnerabilities and Exposures) is an important part of any organization’s security posture. Generative AI can help identify them, and code assist LLMs can help update a dependency chain to resolve them. This is a common use case in our current security environment, and as both attackers and AI continue to advance, we’ll see the battlefield shift further towards client-side vulnerability exploitation.

Got Ourselves a Donnybrook, eh?

Whenever substantial new technological advances emerge, they upset the balance of any ongoing fight. Back when my parents were born, it was gunpowder; today it’s AI. Every defensive measure that exists, from firewalls to application hardening, is required to protect end users and companies alike. The best way to limit costly attacks like the Change Healthcare breach is to safeguard any information related to end users and company operations. AI is the hammer made to help drive the nail of big data into the beam of security. It’s not just any beam either. It’s a load-bearing beam. It’s, like, really really important, and probably made out of super expensive wood. Brazilian Rosewood if I had to guess. It’s critical that security teams, across all security segments, understand and leverage AI to stay ahead in the growing arms race.

There are substantive concerns in the field of security as the capability and usage of LLMs continue to grow, but it’s not all bad news. We’ll continue to do our best to make the online world a safer place for all of us. Whether the threats are OSRS bots or the inexplicably technically advanced Grandma Mabel, we’ll adapt and advance.
